Leaning on Algo to route Docker traffic through Wireguard


Part of the Wireguard series:

- Wireguard VPN

- Routing Select Docker Containers through Wireguard VPN

- Viewing WireGuard Traffic with Tcpdump

- Leaning on Algo to route Docker traffic through Wireguard (most recent; consolidates the previous articles)

I write about Wireguard often. It’s been a bit over a year since my initial article and a lot has changed. Enter algo. Within the last year, it has added support for Wireguard. While algo sets up a bit more than Wireguard, it is unparalleled in ease of deployment. After a couple of prompts, the server will be initialized and configs generated for your users.

In this article I'm going to touch on everything that I've written about before and try to consolidate it into a single post that I can refer back to. This article leans toward advanced usage of Algo and wireguard, though I try to make it clear when you can stop reading if an advanced setup is not necessary.

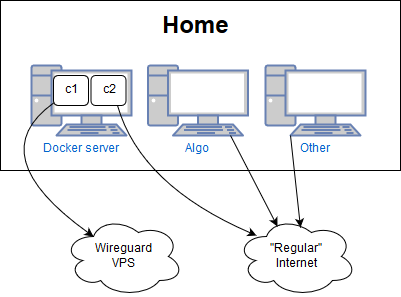

The end goal is to setup a docker server where a subset of the containers are routed through our wireguard interface, as illustrated below. I will also touch upon routing an entire machine’s traffic through the VPN, which is a much easier process.

Algo setup for my home network

Algo Preface

There are many ways to install algo, but I am opinionated. I will illustrate my way, but feel free to deviate.

Algo should be installed on a local machine. I use an Ubuntu VM, but you should also be able to work on Windows via WSL or on a Mac. While algo supports a local installation (where algo is installed on the same server as the VPN), I recommend against it as an algo installation will contain the configurations, and the private and public keys for all users – things that shouldn’t be on such a disposable server.

Algo does have an official docker image (15 pulls as of this writing, and I'm pretty sure I'm half of them). To clarify, this image is not the VPN; it is merely a way to simplify installing the dependencies needed to run algo. Unfortunately, since algo does not have any formal releases at this time, the configuration code in the docker image was out of date with the bleeding-edge config in the repo when I tried it. The mismatch caused DNS issues that had me scratching my head for several hours.

Now we choose a VPS provider. Literally any of them should be fine. I happen to like Vultr: they have a lot of options (like a $3.50/mo plan!), which makes them perfect for disposable servers. While testing my methodology, I think I spun up a couple dozen servers, and in the end it cost me only 20 or so cents. For readers following along, the post contains a small number of Vultr-specific instructions.

Algo Setup

Before we get started, grab your API key from Vultr: https://my.vultr.com/settings/#settingsapi. We need to save this key so algo can access it.

We’ll be executing the following on our algo machine (which, to reiterate, is a machine sitting next to you or close by).

```bash
# Fill me in
VULTR_API_KEY=

# Installs the minimal dependencies (python 2, pip, and git) to get algo up and
# going. It is possible to go a more flexible route by using pyenv, but that may
# be too developer centric and confusing for most people. Not to mention, most
# likely python, pip, and git are already installed
sudo apt install -y python python-pip git

# There are no released versions of algo, so we have to work off the bleeding edge
git clone https://github.com/trailofbits/algo.git
cd algo

# The file that algo will use to read our API key to create our server
cat > vultr.ini <<EOF
[default]
key = $VULTR_API_KEY
EOF

# Set the key so that only we can read it. Wouldn't want other users on the
# system to know the key to mess with our vultr account
chmod 600 vultr.ini

# Using pipenv here greatly simplifies installing python dependencies into an
# isolated environment, so it won't pollute the main system. `pip install --user`
# puts pipenv in ~/.local/bin, which needs to be on your PATH
pip install --user pipenv
pipenv install --skip-lock -r requirements.txt

${EDITOR:-vi} config.cfg
```

Up comes the editor. Edit the list of users who will be using this VPN, and include one for the docker machine (called docker-user later in this article). Keep in mind that algo will ask whether you want to be able to modify this list after setup, so you should have a pretty good idea of your users beforehand. It is more secure to disable modifications of users after setup; it may even be easier to spin up an entirely new VPN with all the users and migrate existing users.
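The docker machine will authenticate as one of these users, so it can be worth confirming that the name actually made it into config.cfg before starting algo. Here is a minimal sketch. It assumes config.cfg keeps its users as a top-level YAML `users:` list of `- name` entries. `list_algo_users` is a hypothetical helper, not part of algo, and the demo runs against an illustrative fragment rather than the real file.

```bash
# Hypothetical helper (not part of algo): print the names listed under the
# top-level `users:` key of an algo config.cfg. Assumes "  - name" entries.
list_algo_users() {
  awk '
    /^users:/ { in_users = 1; next }           # start of the users list
    in_users && /^[^ \t#-]/ { in_users = 0 }   # next top-level key ends it
    in_users && $1 == "-" { print $2 }         # "  - name" -> "name"
  ' "${1:-config.cfg}"
}

# Demo against an illustrative config fragment (not the real algo file)
SAMPLE=$(mktemp)
cat > "$SAMPLE" <<'EOF'
users:
  - phone
  - laptop
  - docker-user

some_other_setting: true
other_list:
  - not-a-user
EOF

USERS=$(list_algo_users "$SAMPLE")
rm -f "$SAMPLE"
printf '%s\n' "$USERS"

if printf '%s\n' "$USERS" | grep -qx docker-user; then
  echo "docker-user is configured"
else
  echo "add docker-user to config.cfg before running algo" >&2
fi
```

Against the real file, run `list_algo_users config.cfg` from the algo directory. The sketch uses awk rather than a YAML parser to avoid extra dependencies. The trade-off is that it only understands this simple list layout.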

```bash
# Start the show
pipenv run ./algo
```

Answer the questions as you see fit, except for one. Answer yes to:

Do you want to install a DNS resolver on this VPN server, to block ads while surfing?

We’ll need it to resolve DNS queries.

You also don't need to enter anything for the following question, as algo will pick up vultr.ini automatically:

Enter the local path to your configuration INI file

Let the command run. It may take 10 minutes if you’re setting up a server halfway around the world.

Once algo has finished, if you are not interested in routing select docker traffic through a wireguard interface, you can skip the rest of the article and read the official wireguard documentation on configuring clients.
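For the simpler case of sending an entire machine's traffic through the VPN, the generated config can be used with wg-quick unchanged. Here is a minimal sketch. It assumes the user's config has been copied to the client as `laptop.conf` (a hypothetical file name) and that the script runs as root. `run` and `full_tunnel_up` are hypothetical helpers, and the commands are only printed unless `DRY_RUN=0` is set.

```bash
# Sketch: bring up a full tunnel with wg-quick using an algo client config.
# Assumptions: run as root on the client; laptop.conf is a hypothetical name.
# Commands are only printed unless DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "$*"; fi
}

full_tunnel_up() {
  conf=$1          # algo-generated wg-quick config
  iface=${2:-wg0}  # wg-quick looks up /etc/wireguard/<iface>.conf
  # The config contains the private key, so keep it readable by root only
  run install -m 600 "$conf" "/etc/wireguard/$iface.conf"
  # Creates the interface, applies Address/DNS, and routes whatever
  # AllowedIPs covers (0.0.0.0/0, ::/0 in algo's configs)
  run wg-quick up "$iface"
  # Optional: bring the tunnel up at boot via the wg-quick@ systemd unit
  run systemctl enable "wg-quick@$iface"
}

full_tunnel_up laptop.conf wg0
```

With `DRY_RUN=0`, `wg-quick down wg0` tears the tunnel back down.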

Routing select docker traffic through Wireguard

Make sure that wireguard is already installed on the box that will be running docker.

Before we hop onto that box, we need to modify the wireguard config files that algo created. These config files are in wg-quick format, and while wg-quick is amazingly convenient, it will route all traffic through the VPN, which may be undesirable. Instead, we're going to employ a method that sends only specific docker containers' traffic through the VPN.

First, from the algo directory on the algo machine, we need to remove the wg-quick incompatibilities from the config of our docker user (one of the users created in config.cfg):

```bash
# The wireguard user (one of the users listed in config.cfg)
CLIENT_USER=docker-user

# Grab the client's VPN address. If there is more than one VPN server, this
# script will need to be modified to reference the correct config
CLIENT_ADDRESS=$(grep Address configs/*/wireguard/$CLIENT_USER.conf | \
  grep -P -o "[\d/\.]+" | head -1)

# Calculate the client's wireguard config sans wg-quick-isms
CLIENT_CONFIG=$(sed '/Address/d; /DNS/d' configs/*/wireguard/$CLIENT_USER.conf)

# The docker subnet for our containers to run in
DOCKER_SUBNET=10.193.0.0/16

# Generate the Debian interface configuration. While this configuration is
# Debian specific, the `ip` commands are Linux distro agnostic, so consult your
# distro's documentation on setting up interfaces. These commands are adapted
# from wg-quick but are suited for an isolated interface
INTERFACE_CONFIG=$(
cat <<EOF
# interfaces marked "auto" are brought up at boot time.
auto wg1
iface wg1 inet manual
# Resolve DNS through the DNS server set up on our wireguard server
dns-nameserver 172.16.0.1
# Create a wireguard interface (device) named 'wg1'. The kernel knows what a
# wireguard interface is as we've already installed the kernel module
pre-up ip link add dev wg1 type wireguard
# Set up the wireguard interface with the config calculated earlier
pre-up wg setconf wg1 /etc/wireguard/wg1.conf
# Give our wireguard interface the client address the server expects traffic
# from our key to come from
pre-up ip address add $CLIENT_ADDRESS dev wg1
up ip link set up dev wg1
# Look up routes for traffic originating from our select docker containers in
# table 200
post-up ip rule add from $DOCKER_SUBNET table 200
# Route table 200 traffic through our wireguard interface
post-up ip route add default via ${CLIENT_ADDRESS%/*} table 200
# rp_filter is reverse path filtering. By default it will ensure that the
# source of the received packet belongs to the receiving interface. While a nice
# default, it will block data for our VPN client. By switching it to '2' we only
# drop the packet if it is not routable through any of the defined interfaces.
post-up sysctl -w net.ipv4.conf.all.rp_filter=2
# Delete the interface when ifdown wg1 is executed
post-down ip link del dev wg1
EOF
)
```
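The `grep -P` step needs a grep built with PCRE support, and it keeps whichever run of digits, dots and slashes appears first on the line. As a hedged alternative, here is a sketch that does the same extraction with plain `sed`. It runs against an illustrative config whose keys and addresses are made up, and it assumes the IPv4 address is listed first on the Address line. `wg_ipv4_address` and `strip_wg_quick` are hypothetical helpers.

The sketch also shows what the `${CLIENT_ADDRESS%/*}` and `${DOCKER_SUBNET%/*}` expansions used in this article evaluate to. `%/*` removes the shortest trailing match of `/*`, which is the CIDR suffix. Newer wireguard-tools releases also provide `wg-quick strip`, which prints a config with the wg-quick-only settings removed.

```bash
# Sketch: PCRE-free equivalents of the CLIENT_ADDRESS / CLIENT_CONFIG steps.

# Print the first IPv4 CIDR on the Address line (assumes IPv4 is listed first)
wg_ipv4_address() {
  sed -n 's|^Address *= *\([0-9.]*/[0-9]*\).*|\1|p' "$1" | head -n 1
}

# Print the config without the wg-quick-only Address and DNS keys,
# which `wg setconf` does not understand
strip_wg_quick() {
  sed '/^Address *=/d; /^DNS *=/d' "$1"
}

# Illustrative config; the keys and addresses are placeholders
SAMPLE=$(mktemp)
cat > "$SAMPLE" <<'EOF'
[Interface]
PrivateKey = notmyprivatekeysodonottry
Address = 10.19.49.2/32,fd9d:bc11:4020::2/128
DNS = 172.16.0.1

[Peer]
PublicKey = notthepublickeyeither
AllowedIPs = 0.0.0.0/0, ::/0
Endpoint = 100.100.100.100:51820
PersistentKeepalive = 25
EOF

CLIENT_ADDRESS=$(wg_ipv4_address "$SAMPLE")
CLIENT_CONFIG=$(strip_wg_quick "$SAMPLE")
DOCKER_SUBNET=10.193.0.0/16
rm -f "$SAMPLE"

echo "address:     $CLIENT_ADDRESS"        # 10.19.49.2/32
echo "route via:   ${CLIENT_ADDRESS%/*}"   # 10.19.49.2 (CIDR suffix removed)
echo "dig -b addr: ${DOCKER_SUBNET%/*}"    # 10.193.0.0
printf '%s\n' "$CLIENT_CONFIG"
```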

Check the results: `CLIENT_CONFIG` should look a little like:

```ini
[Interface]
PrivateKey = notmyprivatekeysodonottry

[Peer]
PublicKey = notthepublickeyeither
AllowedIPs = 0.0.0.0/0, ::/0
Endpoint = 100.100.100.100:51820
PersistentKeepalive = 25
```

Copy this `CLIENT_CONFIG` into /etc/wireguard/wg1.conf on the docker machine.

Copy `INTERFACE_CONFIG` into /etc/network/interfaces.d/wg1 on the docker machine.
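Because wg1.conf holds the client's private key, it should end up readable by root only. One way to avoid ever writing it world-readable is to stage both files locally under a restrictive umask before copying them over. Here is a minimal sketch. The `stage_wg_files` helper and the staging directory are hypothetical, and the placeholder values only stand in for the `CLIENT_CONFIG` and `INTERFACE_CONFIG` variables generated earlier.

```bash
# Sketch: write wg1.conf (private key!) with mode 600 and the interfaces
# stanza with default permissions, under a staging tree that mirrors /etc.
# stage_wg_files is a hypothetical helper, not part of algo or wireguard-tools.
stage_wg_files() {
  stage=$1
  mkdir -p "$stage/etc/wireguard" "$stage/etc/network/interfaces.d"
  rm -f "$stage/etc/wireguard/wg1.conf"  # umask only applies to new files
  (
    umask 077  # newly created files get rw------- (600)
    printf '%s\n' "$CLIENT_CONFIG" > "$stage/etc/wireguard/wg1.conf"
  )
  printf '%s\n' "$INTERFACE_CONFIG" > "$stage/etc/network/interfaces.d/wg1"
}

# Placeholders so the sketch runs standalone; normally these come from the
# script that generated CLIENT_CONFIG and INTERFACE_CONFIG
if [ -z "${CLIENT_CONFIG:-}" ]; then
  CLIENT_CONFIG='[Interface]
PrivateKey = notmyprivatekeysodonottry'
fi
if [ -z "${INTERFACE_CONFIG:-}" ]; then
  INTERFACE_CONFIG='auto wg1
iface wg1 inet manual'
fi

STAGE_DIR=$(mktemp -d)
stage_wg_files "$STAGE_DIR"
ls -l "$STAGE_DIR/etc/wireguard" "$STAGE_DIR/etc/network/interfaces.d"
```

If you copy the files over by other means, running `sudo install -m 600 wg1.conf /etc/wireguard/wg1.conf` on the docker machine sets the mode in the same step.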

Setup Docker Networking

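Create our docker network on the docker machine, using the same subnet as in the interface config:

```bash
DOCKER_SUBNET=10.193.0.0/16
docker network create docker-vpn0 --subnet "$DOCKER_SUBNET"
```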

At this point, if running Debian, you should be able to execute `ifup wg1` successfully. To test wireguard, execute:

```bash
curl --interface wg1 'http://httpbin.org/ip'
```

The IP returned should be the same as the IP in the Endpoint option.
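To make that comparison without eyeballing it, a small sketch can pull the host out of the Endpoint line and the `origin` field out of httpbin's JSON reply. It assumes httpbin answers with a body shaped like `{"origin": "1.2.3.4"}` and that the Endpoint is an IPv4 address rather than a hostname. `endpoint_ip` and `origin_ip` are hypothetical helpers, and the demo uses a canned reply instead of a live request.

```bash
# Hypothetical helpers for checking that traffic leaves via the VPN server.

# Host part of "Endpoint = host:port" in a WireGuard config (IPv4 assumed)
endpoint_ip() {
  sed -n 's/^Endpoint *= *\([0-9.]*\):[0-9]*.*/\1/p' "$1" | head -n 1
}

# First address in the "origin" field of httpbin's /ip JSON, read from stdin
origin_ip() {
  sed -n 's/.*"origin": *"\([0-9.]*\).*/\1/p' | head -n 1
}

# Demo with a sample config and a canned reply standing in for:
#   curl -s --interface wg1 http://httpbin.org/ip
WG_CONF=$(mktemp)
printf '%s\n' '[Peer]' 'Endpoint = 100.100.100.100:51820' > "$WG_CONF"
HTTPBIN_REPLY='{
  "origin": "100.100.100.100"
}'

EXPECTED=$(endpoint_ip "$WG_CONF")
ACTUAL=$(printf '%s\n' "$HTTPBIN_REPLY" | origin_ip)
rm -f "$WG_CONF"

if [ "$EXPECTED" = "$ACTUAL" ]; then
  echo "OK: traffic exits via $ACTUAL"
else
  echo "MISMATCH: expected $EXPECTED, got $ACTUAL" >&2
fi
```

On the docker machine, compare `curl -s --interface wg1 http://httpbin.org/ip | origin_ip` with `endpoint_ip /etc/wireguard/wg1.conf`. Run these as root so the config is readable.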

It's also important to test that our DNS is set up appropriately, as our wireguard server may resolve hosts differently. Additionally, if avoiding DNS leaks is important to you, we want to confirm that queries don't escape the tunnel. Testing DNS is a little more nuanced, as one can't provide an interface for dig to use. Instead, we emulate it by executing a DNS query whose source address is in our docker subnet:

```bash
dig -b ${DOCKER_SUBNET%/*} @172.16.0.1 www.google.com
```

You can then geolocate the returned IPs and see whether they are located in the same area as the server (this really only works for domains that return location-dependent answers). Another way to verify is to execute `tcpdump -n -v -i wg1 port 53` and watch the dig command successfully communicate with 172.16.0.1. Alternatively, one can verify that no traffic was sent on eth0 port 53.
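If these checks misbehave, it's worth confirming that `ifup wg1` actually installed the policy routing from the interface config. The sketch below assumes iproute2's usual output formats. `ip rule show` prints lines like `32765: from 10.193.0.0/16 lookup 200`, and `ip route show table 200` prints `default via ... dev wg1`. The helper names are hypothetical, and the demo feeds in sample output rather than querying the kernel.

```bash
# Hypothetical helpers that inspect iproute2 output read from stdin.

# Succeeds if `ip rule show` sends traffic from SUBNET ($1) to TABLE ($2)
has_rule() {
  awk -v src="$1" -v tbl="$2" '
    $2 == "from" && $3 == src && $4 == "lookup" && $5 == tbl { found = 1 }
    END { exit !found }'
}

# Succeeds if `ip route show table N` has a default route out of DEV ($1)
has_default_dev() {
  awk -v dev="$1" '
    $1 == "default" {
      for (i = 2; i < NF; i++) if ($i == "dev" && $(i + 1) == dev) found = 1
    }
    END { exit !found }'
}

# Demo on sample output; on the docker machine pipe in the real commands
RULES=$(printf '0:\tfrom all lookup local\n32765:\tfrom 10.193.0.0/16 lookup 200\n32766:\tfrom all lookup main\n')
ROUTES='default via 10.19.49.2 dev wg1'

if printf '%s\n' "$RULES" | has_rule 10.193.0.0/16 200 &&
   printf '%s\n' "$ROUTES" | has_default_dev wg1; then
  echo "policy routing for the docker subnet is in place"
else
  echo "rule or route missing: check the output of ifup wg1" >&2
fi
```

On the docker machine, use `ip rule show | has_rule 10.193.0.0/16 200` and `ip route show table 200 | has_default_dev wg1`. Once `ifup wg1` has run, `sysctl -n net.ipv4.conf.all.rp_filter` should also print 2.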

To top our tests off:

```bash
docker run --rm --dns 172.16.0.1 \
  --network docker-vpn0 \
  appropriate/curl http://httpbin.org/ip
```

Providing the `--dns` flag is critical; otherwise docker will delegate to the host machine's /etc/resolv.conf, which we are trying to circumvent with our wg1 interface config!

We won't be keeping a permanent docker network, so delete the network we created:

```bash
docker network rm docker-vpn0
```

Docker Compose

Instead, we'll be using docker compose, which encapsulates command-line arguments better. We'll have docker compose create the subnet and set the DNS server for our container.

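```yaml
version: '3'
services:
  curl:
    image: 'appropriate/curl'
    # appropriate/curl runs curl as its entrypoint; pass the URL to fetch
    command: 'http://httpbin.org/ip'
    dns: '172.16.0.1'
    networks:
      docker-vpn0: {}
networks:
  docker-vpn0:
    ipam:
      config:
        - subnet: 10.193.0.0/16
```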

While the compose file ends up being more lines, the file can be checked into source control and more easily remembered and communicated to others.
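One wrinkle is that the subnet now appears in two places: the compose file and the `ip rule` in /etc/network/interfaces.d/wg1. The two must agree for container traffic to reach table 200. A hedged way to keep them in sync is to render the compose file from the same `DOCKER_SUBNET` value used for the interface config. In the sketch below, `VPN_DNS` is a hypothetical variable name. The `command:` line is an assumption that passes the URL to the image's curl entrypoint, mirroring the earlier `docker run` test. The script overwrites docker-compose.yml in the current directory.

```bash
# Sketch: generate docker-compose.yml from the same DOCKER_SUBNET used for
# the wg1 interface config, so the ip rule and the compose network agree.
DOCKER_SUBNET=${DOCKER_SUBNET:-10.193.0.0/16}
VPN_DNS=172.16.0.1   # DNS resolver on the algo server

cat > docker-compose.yml <<EOF
version: '3'
services:
  curl:
    image: 'appropriate/curl'
    command: 'http://httpbin.org/ip'
    dns: '$VPN_DNS'
    networks:
      docker-vpn0: {}
networks:
  docker-vpn0:
    ipam:
      config:
        - subnet: $DOCKER_SUBNET
EOF

cat docker-compose.yml
```

The generator script is what gets checked into source control, so the subnet is defined once per machine rather than copied by hand.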

```bash
docker-compose up
```

And we're done! Straying from the official algo and wireguard docs did add a bit of boilerplate, but hopefully not much, and it's easy to maintain.

I must mention that solution #1 from a previous article did not earn a shoutout here. To recap, solution #1 encapsulated wireguard in a docker container and routed other containers through the wireguard container. Initially, this was my top choice, but I've switched to the method illustrated here for reasons partly explained in that previous article. Wireguard is light enough, and easy enough to gain insight into with standard linux tools, that having the underlying OS manage the interface outweighs the benefits of an encapsulated wireguard container.

Comments

If you'd like to leave a comment, please email [email protected]

Hi, thanks for the great article! I don't know if I'm missing something, but it's not working 100% of the time. On the Docker host, the following commands (`curl --interface wg1 'http://httpbin.org/ip'` and `dig -b ${DOCKER_SUBNET%/*} @172.16.0.1 www.google.com`) work just fine. However, in the container, DNS requests work fine, but HTTP requests fail most of the time… I'm running armbian on a Rock64 board. Thanks!