Turning Dropwizard Performance up to Eleven

Published on:Table of Contents

Banner generated through trianglify

Introduction

The Dropwizard web framework, simplifies java web development significantly. What I’m interested in, is how much overhead are we accepting compared to a more raw solution because if you look at the TechEmpower Web Framework Benchmarks Round 11 Json Serialization, you’ll notice Dropwizard is a fifth of the requests processed of what the raw server is built upon (Jetty). I decided to investigate why and if there is anything we can do to improve performance.

Tools for Investigation

For HTTP benchmarking, I recommend using at least the 4.0 version of wrk. If your package manager only contains an earlier version, I’d recommend building wrk from source because it is one of the easier packages to do so.

For Java profiling, I’ll be using Java’s

VisualVM, which comes bundled in the JDK. Launch

VisualVM on the host machine and point it to the remote host where the

benchmarking will take place. In order to expose the metrics, the java

invocation has to change. Below is an example that will expose the information

on port 3333. Since no authentication or ssl is enabled, ensure that the

benchmarking box isn’t publicly accessible.

java -Dcom.sun.management.jmxremote.ssl=false \

-Dcom.sun.management.jmxremote.authenticate=false \

-Dcom.sun.management.jmxremote.rmi.port=3333 \

-Dcom.sun.management.jmxremote.port=3333 \

-Djava.rmi.server.hostname=<hostname> \

-jar sample-1.0.jar server config.yaml

# hostname, in this instance, is the hostname you will be connecting with in

# visualvm. This is critical. I had proxy server port forwarding to java server.

# Both visualvm and the java server had to reference the proxy server's hostname

# for it to work.

# ssh -L 0.0.0.0:3333:<java server>:3333

We’ll start by profiling the tutorial app on an 8 core, 4GB RAM ubuntu server VM. Let the profiling begin!

The Logging Problem

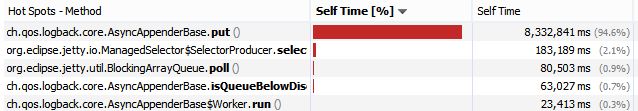

On first profiling, I immediately noticed a problem and maybe you’ll notice it too:

Logging can be a CPU hot spot

Logging accounted for >95% of the sampled time! And since the only logging that

should be going on is request logging, almost all the time spent processing is

logging requests. Before any questions are raised, I had the logging output

redirected to /dev/null, so we’re not even measuring the performance of my

disk!

This is when I checked the TechEmpower benchmarks and realized that the frameworks I looked at do no logging.

Others have

investigated

increasing logging performance, which we can apply some to Dropwizard. One of

them being the logger’s queueSize.

server:

requestLog:

appenders:

- type: console

queueSize: 2048

The default queue size is Logback’s default queue size, which is 256. From Logback’s documentation:

When the queue size is greater than one, new events are enqueued, assuming that there is space available in the queue. Using a queue length greater than one can improve performance by eliminating delays caused by transient network delays.

Increasing the queue size did have a noticeable impact on performance: from 10,000 to 13,500 thousand requests per second. Not bad.

Custom Jackson Serializer

Let’s wish the logging problem away (by settings appenders to an empty list).

In reality, an environment may have the ability that mitigates the logging

problem.

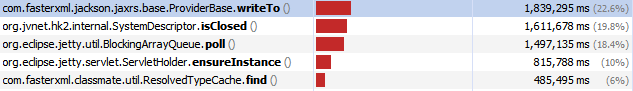

Running the benchmark tool and profiler, what’s the next bottleneck?

CPU hot spots after removing logging

Ah, looks like Jackson, the Java

serialization library. Jackson takes our Java objects (Saying in the

tutorial) and turns it into JSON. There is some overhead as Jackson has to use

reflection in order to figure out how to create the JSON.

One should shudder when the word ‘reflection’ is used in a performance post. We can help Jackson out by creating a custom serializer class that doesn’t need reflection.

public class SayingSerializer extends JsonSerializer<Saying> {

@Override

public void serialize(Saying saying, JsonGenerator json, SerializerProvider provider)

throws IOException {

json.writeStartObject();

json.writeNumberField("id", saying.getId());

json.writeStringField("content", saying.getContent());

json.writeEndObject();

}

}

This technique may not see a large increase performance partly because Dropwizard ships Jackson with the Jackson Afterburner module installed, which optimizes common data binding scenarios. In a couple tests, I was able to see Dropwizard serve close to 20,000 requests a second, but still Jackson took up a large chunk of time in the profiling output.

Streaming Jackson Serializer

We can eliminate all Jackson overhead by using its streaming API. In order to incorporate the streaming API, method signatures need to be changed and potentially the structure of endpoint will need to be rethought. Below is how the tutorial’s endpoint will change.

@GET

@Timed

public StreamingOutput sayHello(@QueryParam("name") @Length(max=5) Optional<String> name) {

final String value = String.format(template, name.or(defaultName));

return output -> {

try (final JsonGenerator json = jsonFactory.createGenerator(output)) {

json.writeStartObject();

json.writeNumberField("id", counter.incrementAndGet());

json.writeStringField("content", value);

json.writeEndObject();

}

};

}

What a change! At 30,000 requests a second, the streaming Jackson solution is 3x as efficient as the tutorial. After serialization optimization, hk2, which is a dependency injection framework used by Jersey, started to become more of a bottleneck; recorded as 33% of self time. Eliminating Jersey would fix this.

From here on out, since the streaming serializer yielded such a performance increase, this will be our serialization method of choice.

Validation Aside

The endpoints contain validation annotations because I thought that the sad path would create performance problems. However, throughout testing, I found no evidence that validation errors, which will cause an exception to be thrown, have a performance impact. This is good news for those that have found that validation annotations and exceptions simplify the main logic of their endpoint.

Dropwizard Servlet

So let’s eschew Jersey and use raw Java Servlets.

package com.example;

import com.fasterxml.jackson.core.JsonFactory;

import com.fasterxml.jackson.core.JsonGenerator;

import com.google.common.base.Strings;

import io.dropwizard.Application;

import io.dropwizard.setup.Environment;

import org.eclipse.jetty.servlet.ServletHolder;

import javax.servlet.ServletException;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import java.io.IOException;

import java.util.concurrent.atomic.AtomicLong;

public class DropRawApplication extends Application<DropRawConfiguration> {

public static void main(final String[] args) throws Exception {

new DropRawApplication().run(args);

}

@Override

public String getName() {

return "DropRaw";

}

@Override

public void run(final DropRawConfiguration config,

final Environment env) {

final DropRawServlet servlet = new DropRawServlet(env.getObjectMapper().getFactory(),

config.getTemplate(), config.getDefaultName());

env.getApplicationContext().addServlet(new ServletHolder(servlet), "/perf");

}

public static class DropRawServlet extends HttpServlet {

private final JsonFactory jsonFactory;

private final String template;

private final String defaultName;

private final AtomicLong counter = new AtomicLong();

public DropRawServlet(JsonFactory jsonFactory, String template, String defaultName) {

this.jsonFactory = jsonFactory;

this.template = template;

this.defaultName = defaultName ;

}

@Override

protected void doGet(HttpServletRequest req, HttpServletResponse resp) throws ServletException, IOException {

final String name = Strings.nullToEmpty(req.getParameter("name"));

resp.setContentType("application/json");

if (name.length() > 5) {

resp.setStatus(HttpServletResponse.SC_BAD_REQUEST);

try (final JsonGenerator json = jsonFactory.createGenerator(resp.getOutputStream())) {

json.writeStartObject();

json.writeStringField("error", "Query parameter name must be shorter than 5 letters");

json.writeEndObject();

return;

}

}

resp.setStatus(HttpServletResponse.SC_OK);

try (final JsonGenerator json = jsonFactory.createGenerator(resp.getOutputStream())) {

json.writeStartObject();

json.writeNumberField("id", counter.incrementAndGet());

json.writeStringField("content", String.format(template, name.length() != 0 ? name : defaultName));

json.writeEndObject();

}

}

}

}

Notice that we keep all the goodness that is Dropwizard, and there is probably less code in this example than in the actual Dropwizard tutorial.

Performance increases dramatically, rising to 100,000 requests a second (a 10x increase over the tutorial). Sampling is now showing an increasing amount of time that Jetty is polling for incoming requests, signalling that our benchmarking tool is becoming stretched.

One can combine this servlet approach with a traditional Jersey approach.

Notice that I put the Dropwizard servlet on the /perf path, which allows the

yaml configuration to specify a rootPath of something other than /* (eg,

/app), such that /perf requests are directed to the raw servlet and /app

requests are sent to Jersey.

For those that want Dropwizard but not Jersey, this is certainly the way forward.

Jetty Servlet

Dropping down another level, we can forgo all the goodness that Dropwizard brings to the table and code servlets right into Jetty

package com.example.raw.jetty;

import com.fasterxml.jackson.core.JsonFactory;

import com.fasterxml.jackson.core.JsonGenerator;

import com.google.common.base.Strings;

import org.eclipse.jetty.server.Server;

import org.eclipse.jetty.servlet.ServletHandler;

import javax.servlet.ServletException;

import javax.servlet.http.HttpServlet;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import java.io.IOException;

import java.util.concurrent.atomic.AtomicLong;

/** Replicates the same happy path as the Dropwizard getting started example without

* and configuration builtin

*/

public class JettyServlet {

private static final String defaultName = "Nick" ;

private static final String template = "Hello %s!";

private static final JsonFactory jsonFactory = new JsonFactory();

private static final AtomicLong counter = new AtomicLong();

public static void main( String[] args ) throws Exception {

Server server = new Server(8095);

ServletHandler handler = new ServletHandler();

server.setHandler(handler);

handler.addServletWithMapping(DropwizardExampleHandler.class, "/*");

server.start();

server.join();

}

public static class DropwizardExampleHandler extends HttpServlet {

@Override

protected void doGet(HttpServletRequest request, HttpServletResponse response )

throws ServletException, IOException {

final String name = Strings.nullToEmpty(request.getParameter("name"));

response.setContentType("application/json");

if (name.length() > 5) {

response.setStatus(HttpServletResponse.SC_BAD_REQUEST);

try (final JsonGenerator json = jsonFactory.createGenerator(response.getOutputStream())) {

json.writeStartObject();

json.writeStringField("error", "Query parameter name must be shorter than 5 letters");

json.writeEndObject();

return;

}

}

response.setStatus(HttpServletResponse.SC_OK);

try (final JsonGenerator json = jsonFactory.createGenerator(response.getOutputStream())) {

json.writeStartObject();

json.writeNumberField("id", counter.incrementAndGet());

json.writeStringField("content", String.format(template, name.length() != 0 ? name : defaultName));

json.writeEndObject();

}

}

}

}

The performance does increase to 120,000 requests a second, but I’d like to de-emphasize this approach because you lose:

- Yaml configuration

- Configured Jackson

- Command line parsing

- Metrics

Raw Jetty

Jetty contains its own API for specifying how to handle requests and responses that is different than the servlet API.

package com.example.raw.jetty;

import com.fasterxml.jackson.core.JsonFactory;

import com.fasterxml.jackson.core.JsonGenerator;

import com.google.common.base.Strings;

import org.eclipse.jetty.http.HttpField;

import org.eclipse.jetty.http.HttpHeader;

import org.eclipse.jetty.http.PreEncodedHttpField;

import org.eclipse.jetty.server.Request;

import org.eclipse.jetty.server.Server;

import org.eclipse.jetty.server.handler.AbstractHandler;

import javax.servlet.ServletException;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import java.io.IOException;

import java.util.concurrent.atomic.AtomicLong;

/** Replicates the same happy path as the Dropwizard getting started example without

* and configuration builtin

*/

public class RawJetty {

private static final String defaultName = "Nick" ;

private static final String template = "Hello %s!";

private static final JsonFactory jsonFactory = new JsonFactory();

private static final AtomicLong counter = new AtomicLong();

public static void main( String[] args ) throws Exception {

Server server = new Server(8095);

server.setHandler(new DropwizardExampleHandler());

server.start();

server.join();

}

public static class DropwizardExampleHandler extends AbstractHandler {

private static final HttpField contentType = new PreEncodedHttpField(HttpHeader.CONTENT_TYPE, "application/json");

@Override

public void handle(String s, Request baseRequest, HttpServletRequest request, HttpServletResponse response)

throws IOException, ServletException {

baseRequest.setHandled(true);

final String name = Strings.nullToEmpty(request.getParameter("name"));

baseRequest.getResponse().getHttpFields().add(contentType);

if (name.length() > 5) {

response.setStatus(HttpServletResponse.SC_BAD_REQUEST);

try (final JsonGenerator json = jsonFactory.createGenerator(response.getOutputStream())) {

json.writeStartObject();

json.writeStringField("error", "Query parameter name must be shorter than 5 letters");

json.writeEndObject();

return;

}

}

response.setStatus(HttpServletResponse.SC_OK);

try (final JsonGenerator json = jsonFactory.createGenerator(response.getOutputStream())) {

json.writeStartObject();

json.writeNumberField("id", counter.incrementAndGet());

json.writeStringField("content", String.format(template, name.length() != 0 ? name : defaultName));

json.writeEndObject();

}

}

}

}

The performance of this method is phenomenal. The TechEmpower benchmarks registers it as almost twice as fast as the servlet version. In my profiling, the results showed that the vast majority of the time Jetty was twiddling its thumbs. So even though this version only registered 10,000 more requests per second than the Jetty servlet version, the bottleneck was with the wrk, the benchmark tool.

Conclusion

Here are the numbers consolidated from the profiling different methods. Remember:

- All numbers would be significantly lower if request logging was enabled, as >95% of the time sampled is in logging.

- Methods with >=100,000 req/s are starting to become bottlenecked by the benchmarking tool

| type | req/s |

|---|---|

| raw jetty | 130,000 |

| jetty servlet | 120,000 |

| dropwizard servlet | 100,000 |

| dropwizard sample | 10,000 |

| log queue size | 13,500 |

| jackson serializer | 20,000 |

| jackson streaming | 30,000 |

The numbers in the TechEmpower web framework benchmarks are inflated because request logging is disabled, and I believe that request logging is an important aspect of a web framework. There should always be an indication of a request (an audit log, of sorts). It’s possible to circumvent this requirement by using a metrics framework (like one bundled in Dropwizard), but one would be sacrificing accuracy and convenience that a request log provides.

If your application can disable all logging aside from exceptional logging, and the API produced is simple, use the Dropwizard Servlet technique shown for a 10x increase, else for a more complex API, stream the response back to the client using Jackon’s streaming API for a 3x increase.

As always benchmark the code, and make sure you give the web application plenty of time to warm up before recording times.

Comments

If you'd like to leave a comment, please email [email protected]

I was trying to figure out where the slowdown was in Dropwizard compared to raw servlets on the benchmark. It was eye opening and a sort of duh moment when you isolated Jersey. Of course, it’s reflection!

In fact, play and sparkjava likely suffer from the same affliction.

The annotations are useful and clean but its nice to be able to bust out the servlet for the small number of heavily used calls (e.g. logging user events).

Anyway, thanks for the post :)

Also found this post really helpful for just learning the pieces of Dropwizard, how they come together and their cost. Thanks.

I have a question … how could you take the load to 10,000 RPS . I wrote a go client and with more than 400 RPS its giving connection reset by peer issue . Dropwizard configuration is as follows :

Note: I am tring to load test this on a local mac machine , 16GB RAM and 2.2 GHz Intel Core i7. Intially the go code running was giving a to many open files error which I fixed by increasing the file limit.

My guess is you’re running into macOS’s default openfiles limit, any sort of high load http testing esp. if keepalive isn’t getting used will quickly hit the limit.