Results of Authoring a JS Library with Rust and Wasm


I recently completed a major overhaul of one of my JavaScript libraries. The overhaul replaced a pure JS parser (generated by jison) with one that delegates parsing to an implementation written in Rust and compiled to WebAssembly (Wasm). I want to share my journey, as overall it’s been positive, and I expect that other JS libraries could benefit from this approach.

Introduction

The parser in question takes a proprietary format that is akin to JSON and looks like this:

```
tag=AAA
name="Jåhkåmåhkke"
core=BBB
buildings={ 1 2 }
core=CCC
```

and returns a plain JavaScript object:

```js
{
  tag: "AAA",
  name: "Jåhkåmåhkke",
  core: ["BBB", "CCC"],
  buildings: [1, 2]
}
```
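To make that mapping concrete, here is a deliberately tiny TypeScript sketch of the transformation. It is not the library’s parser (that lives in Rust); `parseFlat` is a hypothetical function that only understands `key=value` pairs, quoted strings, bare tokens, and `{ ... }` lists, and it assumes that a repeated key such as `core` should be collected into an array.

```typescript
// Hypothetical sketch: a toy parser for the flat key=value subset shown above.
// It ignores nested objects, dates, and the other syntax the real Rust parser handles.
type Value = string | number | Value[];

// Split input into quoted strings, braces, "=", or runs of other non-space characters.
function tokenize(input: string): string[] {
  return input.match(/"[^"]*"|[{}=]|[^\s{}="]+/g) ?? [];
}

// Quoted tokens stay strings; bare tokens become numbers when they look numeric.
function parseScalar(token: string): Value {
  if (token.startsWith('"')) return token.slice(1, -1);
  const n = Number(token);
  return Number.isNaN(n) ? token : n;
}

function parseFlat(input: string): Record<string, Value> {
  const tokens = tokenize(input);
  const entries = new Map<string, Value>();
  const repeated = new Set<string>(); // keys already promoted to arrays
  let i = 0;
  while (i < tokens.length) {
    const key = tokens[i++];
    if (tokens[i++] !== "=") throw new Error(`expected '=' after ${key}`);
    let value: Value;
    if (tokens[i] === "{") {
      i++; // consume "{"
      const items: Value[] = [];
      while (i < tokens.length && tokens[i] !== "}") items.push(parseScalar(tokens[i++]));
      if (tokens[i++] !== "}") throw new Error(`unterminated list for ${key}`);
      value = items;
    } else if (i < tokens.length) {
      value = parseScalar(tokens[i++]);
    } else {
      throw new Error(`missing value for ${key}`);
    }
    const prev = entries.get(key);
    if (prev === undefined) {
      entries.set(key, value); // first occurrence
    } else if (repeated.has(key)) {
      (prev as Value[]).push(value); // third or later occurrence
    } else {
      entries.set(key, [prev, value]); // second occurrence: promote to an array
      repeated.add(key);
    }
  }
  const result: Record<string, Value> = {};
  entries.forEach((v, k) => {
    result[k] = v;
  });
  return result;
}
```

Tracking `repeated` separately keeps a list value distinct from a repeated key: `x={ 1 2 }` followed by `x=3` yields `[[1, 2], 3]`.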

So what were the pain points of the original JS implementation?

Original Motivation

Soon after releasing the JS library, I stopped actively developing it. The files that I want to parse are millions of lines long, which can result in tens of millions of JS values (numbers, strings, arrays, objects, and dates). Parsing and allocating this many objects was taxing enough that back in 2015 I found no practical way to run it: the memory pressure caused nodejs to run out of heap space, and when the code didn’t crash, parsing a single file could easily take 30 to 60 seconds.

In 2020, I wrote this parser in Rust. The results have been fantastic: zero-copy parsing at 1 GB/s.

But speed wasn’t the only contributing factor. In fact, I firmly believe that someone could design a performant (though maybe not as performant) API around this format in pure JS. The other big motivating factor is accuracy and consistency. I don’t own or control this proprietary format in any way, so I stumble upon new syntax all the time. The most recent examples include data like:

```
levels = { 10 0=1 0=2 }
color = hex { eeeeee }
color = rgb { 0 100 150 }
```

So after doubling down to cover all known syntax in Rust, I couldn’t imagine duplicating that effort for other languages and then keeping the parsing logic in sync. The Rust parser has been battle-tested in multiple projects, and I don’t want to start from scratch for a new language. To me, it seems preferable to have one implementation and bind other languages to it. Considering I’ve written this parser in C#, F#, JS, and most recently Rust, anything that cuts down on maintenance work is desirable.

My mind shifted to the JS implementation. Rust can compile to Wasm, and both nodejs and browsers can execute Wasm, so rewriting the JS parser to delegate to Wasm seemed like a natural first step in determining how feasible it is to keep all the logic in Rust.

I should note that .NET languages can use Wasmtime to run Wasm, but that support is less mature. I wanted to start prototyping on the platforms where Wasm is most mature. Part of this process was to test whether Wasm is too immature for a given ecosystem. Where Wasm support is still fledgling, one can fall back to interoperating with native libraries (though native libraries have their own tradeoffs).

Rust Wasm and the JS Wrapper

This won’t be a tutorial on Rust and its Wasm tooling (wasm-bindgen and wasm-pack). I suggest reading their respective books and tutorials.

My view is that one should always write a JS wrapper that exposes the Rust code as idiomatically as possible, instead of publishing the Wasm as a separate NPM package. To me, an idiomatic design means the user decides when the Wasm is loaded and compiled without having to handle it directly. For instance, I have users create instances through a static `initialize` function that transparently handles the Wasm:

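```typescript
// wasm-bindgen glue generated by `wasm-pack build -t web`
import init from "./pkg/jomini_js";
// With @rollup/plugin-wasm, this import is a function that loads and compiles the Wasm
import the_wasm from "./pkg/jomini_js_bg.wasm";

// Module-level flag so the Wasm is only initialized once
let initialized = false;

export class Jomini {
  private constructor() {}

  /* snip member functions that use wasm functions */

  public static initialize = async () => {
    if (!initialized) {
      // @ts-ignore
      await init(the_wasm());
      initialized = true;
    }
    return new Jomini();
  };
}
```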

Notice that the Wasm is initialized only once and reused on subsequent `initialize` invocations. Even small Wasm bundles can incur a non-negligible performance tax (a 50 KB bundle compiles in 20 ms), so cache them.
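One caveat with a boolean flag: if two callers invoke `initialize` concurrently, both can observe `initialized === false` before either finishes, and the Wasm gets initialized twice. A common variation caches the in-flight promise instead. The sketch below is hypothetical: `Parser` and `loadWasm` are made-up stand-ins for the wrapper class and the `init(the_wasm())` call, so the pattern can be shown in isolation.

```typescript
// Hypothetical sketch: memoize the initialization promise so concurrent
// callers share a single Wasm initialization.
let initCalls = 0;

// Stand-in for `init(the_wasm())` from the wasm-bindgen glue.
async function loadWasm(): Promise<void> {
  initCalls++;
  await Promise.resolve(); // simulate asynchronous compilation
}

class Parser {
  private static ready: Promise<void> | undefined;

  private constructor() {}

  public static async initialize(): Promise<Parser> {
    // The first caller starts initialization; everyone else awaits the same promise.
    if (Parser.ready === undefined) {
      Parser.ready = loadWasm().catch((err) => {
        Parser.ready = undefined; // allow a retry if initialization failed
        throw err;
      });
    }
    await Parser.ready;
    return new Parser();
  }
}
```

Resetting the cached promise on failure is a design choice: without it, one failed load would make every later `initialize` call fail as well.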

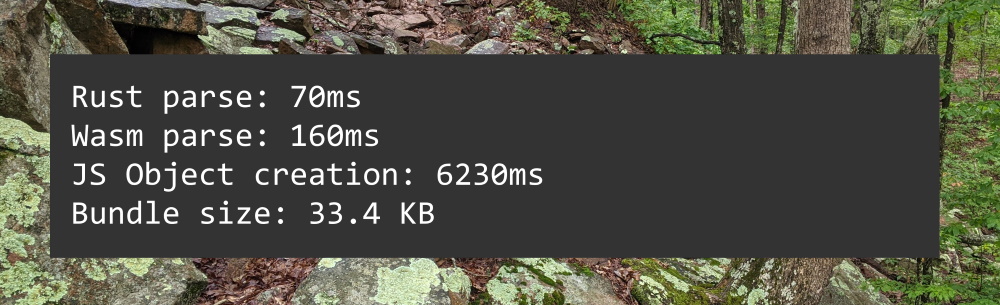

And in case you are curious about performance, it’s great! The Wasm implementation can parse a 1.3 million line file in 160 ms. Considering that parsing it natively in Rust takes 70 ms, I think this is a great outcome. What might come as a bit of a performance shock is that deserializing the parsed data into a JS object takes over 6 seconds! I find this incredible: object creation can be more expensive than parsing. Expensive object creation isn’t restricted to JS either; Python is also afflicted. We can fix this with a bit of ingenuity.

Wasm Lifetimes

The parser is zero-copy, but as shown above, converting the parsed data into a JS object can be too expensive. The solution is to do less work: have the user specify the data they are interested in ahead of time using JSON pointer syntax. The example below extracts the player and then the prestige field from the player’s country.

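```typescript
import { readFileSync } from "fs";
import { Jomini } from "jomini";

const buffer = readFileSync(process.argv[2]);
const parser = await Jomini.initialize();

// Only the values looked up inside the callback are materialized as JS values
const { player, prestige } = parser.parseText(
  buffer,
  { encoding: "windows1252" },
  (query) => {
    const player = query.at("/player");
    const prestige = query.at(`/countries/${player}/prestige`);
    return { player, prestige };
  }
);
```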

The alternative is to decode the whole file into an object and perform the same operations on it:

```typescript
const buffer = readFileSync(process.argv[2]);
const parser = await Jomini.initialize();

// Decodes the entire file into a JS object before any lookup happens
const save = parser.parseText(buffer, { encoding: "windows1252" });
const player = save.player;
const prestige = save.countries[player].prestige;
```

Querying for just the interesting bits completes about 40x faster (0.16 s vs 6.3 s) and uses about half the memory.
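For readers unfamiliar with JSON pointer syntax (RFC 6901): a pointer is a sequence of `/`-separated reference tokens, where `~1` stands for `/` and `~0` stands for `~`. The following sketch resolves a pointer against an already-decoded plain object. It only illustrates the addressing scheme; the library evaluates pointers against the parsed data inside Wasm, and `resolvePointer` is a hypothetical name.

```typescript
// Hypothetical sketch of RFC 6901 JSON pointer resolution over plain JS data.
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

function resolvePointer(doc: Json, pointer: string): Json | undefined {
  if (pointer === "") return doc; // the empty pointer refers to the whole document
  if (!pointer.startsWith("/")) throw new Error(`invalid pointer: ${pointer}`);
  let current: Json | undefined = doc;
  for (const raw of pointer.slice(1).split("/")) {
    // Unescape "~1" before "~0" so that "~01" becomes "~1", not "/".
    const token = raw.replace(/~1/g, "/").replace(/~0/g, "~");
    if (Array.isArray(current)) {
      // Array indices must be non-negative integers without leading zeros.
      if (!/^(0|[1-9][0-9]*)$/.test(token)) return undefined;
      current = current[Number(token)];
    } else if (current !== null && typeof current === "object") {
      current = Object.prototype.hasOwnProperty.call(current, token) ? current[token] : undefined;
    } else {
      return undefined; // cannot descend into a scalar
    }
    if (current === undefined) return undefined;
  }
  return current;
}
```

With made-up data such as `{ player: "SWE", countries: { SWE: { prestige: 42.5 } } }`, resolving `/player` and then `/countries/SWE/prestige` mirrors the two `query.at` calls in the earlier example.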

Implementing this query API on top of a zero-copy parser (a struct whose lifetime is tied to the underlying data) is a bit tricky, as wasm-bindgen doesn’t let one expose a struct with a lifetime. The solution looks as follows:

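```rust
#[wasm_bindgen]
pub struct Query {
    // Owns the parsed bytes so that the data `tape` borrows from stays alive
    _backing_data: Vec<u8>,
    // Lifetime transmuted to 'static; it actually borrows from `_backing_data`
    tape: TextTape<'static>,
}
```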

This struct works as desired through a two-pronged approach:

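- We transmute away the lifetime of `tape` to `'static` so that we can store it in a wasm-bindgen compatible struct. This is the only `unsafe` operation, and it has to be unsafe because we’re exposing this to JS and Rust can’t borrow check JS usage.
- We own the data that was parsed (`_backing_data`) so that the data `tape` references isn’t reclaimed. Because a `Vec` keeps its bytes on the heap, moving it into the struct doesn’t move those bytes, so the references inside `tape` stay valid.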

This is also why the API is exposed through a callback: the user extracts the data they are interested in, and at the end of the callback the wrapper frees the `Query` for them (via the `free` method that wasm-bindgen generates on exported structs), so users don’t have to worry about manual memory management. This goes back to ensuring that users have an idiomatic API at their disposal.
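The callback-scoped cleanup can be sketched in TypeScript as follows. `FakeQuery` and `withQuery` are hypothetical stand-ins: `FakeQuery` mimics a wasm-bindgen exported struct by exposing a `free()` method, and `withQuery` guarantees that `free()` runs even when the user’s callback throws.

```typescript
// Hypothetical sketch: scope a Wasm-backed object to a callback so it is always freed.
// FakeQuery stands in for a wasm-bindgen exported struct.
class FakeQuery {
  freed = false;
  private readonly data: Record<string, unknown>;

  constructor(data: Record<string, unknown>) {
    this.data = data;
  }

  at(pointer: string): unknown {
    if (this.freed) throw new Error("use after free");
    // Only single-segment pointers, to keep the sketch short.
    return this.data[pointer.slice(1)];
  }

  free(): void {
    this.freed = true; // a real wasm-bindgen struct releases its Wasm memory here
  }
}

function withQuery<T>(data: Record<string, unknown>, cb: (q: FakeQuery) => T): T {
  const query = new FakeQuery(data);
  try {
    return cb(query);
  } finally {
    query.free(); // runs on normal return and when cb throws
  }
}
```

Because only the callback’s return value escapes, any plain JS values the user extracted remain valid after the backing object is freed.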

Bundling

This will be the most opinionated section here. I prefer projects with a small number of dev dependencies and a minimal amount of configuration.

Here’s the build step from package.json: we call wasm-pack manually, remove the output of any previous build, and run rollup:

```json
{
  "build": "wasm-pack build -t web --out-dir ../src/pkg crate && rm -rf dist/ && rollup -c"
}
```

The rollup config is similarly simple:

```javascript
import { wasm } from "@rollup/plugin-wasm";
import typescript from "@rollup/plugin-typescript";

const rolls = (fmt) => ({
  input: "src/index.ts",
  output: {
    dir: `dist/${fmt}`,
    format: fmt,
    name: "jomini",
  },
  plugins: [
    wasm({ maxFileSize: 100000 }),
    typescript({ outDir: `dist/${fmt}` }),
  ],
});

export default [rolls("cjs"), rolls("es")];
```

Rollup is used because it’s the best tool for generating both CommonJS and ES modules: CommonJS for the nodejs folks, and ES modules for those using bundlers that can take advantage of tree shaking to remove unused code. Tree shaking is partially limited by the support code that the Wasm bundle executes on load (i.e., setting up the heap), but this shouldn’t be too restrictive, especially if the main API of a library uses Wasm. Make sure that your package.json contains the appropriate references to the modules and types:

```json
{
  "main": "./dist/cjs/index.js",
  "module": "./dist/es/index.js",
  "types": "./dist/es/lib/index.d.ts",
  "files": ["dist"]
}
```

For those curious, I’ve eschewed the @wasm-tool/rollup-plugin-rust package, as introducing a middleman doesn’t always significantly cut down on code, and it adds another layer to debug when things go wrong.

It’s easy to miss in the config snippet, but the following plugin call inlines our Wasm as a base64-encoded string if the Wasm is smaller than 100 KB (an arbitrarily chosen threshold that is larger than our bundle):

```javascript
wasm({ maxFileSize: 100000 })
```

The Wasm itself is still compiled asynchronously. If we omitted `maxFileSize`, the Wasm would be emitted as a separate file. An additional file that has to be fetched is undesirable, as it would be surprising behavior for clients who may not be aware of all the implications of their direct or transitive dependencies (i.e., they may need a build system that understands Wasm if they are deploying to the web). By inlining it as base64, we make the Wasm an implementation detail (for node 12+ and the vast majority of browser users).

Yes, base64 grows the Wasm bundle by ~33%, but this is offset by two factors: compression, which keeps the cost of transferring it across the network below 33%, and the fact that Wasm bundles generated by Rust tend to be small. As long as one stays cognizant of file sizes, this shouldn’t be an issue. For me, the generated Wasm bundle is 54 KB, and bundlephobia lists the total bundle size (JS + inlined base64 Wasm) as 33.4 KB after minification and compression, which is smaller than react-dom (35.6 KB). In my opinion this price is easily justified. If the Wasm bundle is significantly larger, I suspect clients are more likely to be aware of the dependency implications, so one can opt to supply the Wasm bundle as a separate file.
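To see what inlining involves at runtime, here is a hypothetical sketch of the steps: decode a base64 string back to bytes, check the Wasm magic header, and estimate the size cost of the encoding. `@rollup/plugin-wasm` generates its own loader, so none of these function names come from the plugin; in a real loader the decoded bytes would then be handed to `WebAssembly.compile`. The sample string `AGFzbQEAAAA=` is the smallest valid Wasm module: just the `\0asm` magic number followed by version 1.

```typescript
// Hypothetical sketch of what an inlined-Wasm loader does before compiling.
const ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Decode standard (RFC 4648) base64 without relying on atob or Buffer.
function decodeBase64(b64: string): Uint8Array {
  const clean = b64.replace(/=+$/, "");
  const out = new Uint8Array(Math.floor((clean.length * 6) / 8));
  let acc = 0; // pending bits
  let bits = 0; // number of pending bits held in acc
  let pos = 0;
  for (const ch of clean) {
    const v = ALPHABET.indexOf(ch);
    if (v < 0) throw new Error(`invalid base64 character: ${ch}`);
    acc = (acc << 6) | v;
    bits += 6;
    if (bits >= 8) {
      bits -= 8;
      out[pos++] = (acc >> bits) & 0xff;
      acc &= (1 << bits) - 1; // drop the bits just emitted
    }
  }
  return out;
}

// Every Wasm binary starts with the bytes 0x00 0x61 0x73 0x6d ("\0asm").
function hasWasmMagic(bytes: Uint8Array): boolean {
  return bytes.length >= 8 && bytes[0] === 0x00 && bytes[1] === 0x61 && bytes[2] === 0x73 && bytes[3] === 0x6d;
}

// Base64 emits 4 characters per 3 input bytes (with padding), about 33% growth.
function base64Length(byteLength: number): number {
  return 4 * Math.ceil(byteLength / 3);
}
```

For a 54,000-byte module, `base64Length` gives 72,000 characters before compression, which is the ~33% growth discussed above.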

Finishing Touches

We’re close to wrapping up the library. At this point you can run unit tests via ava. So far, ava outshines jest and mocha + co, as it’s a single, relatively small dependency to install, and I can immediately start writing unit tests afterwards with no configuration. These unit tests are written in JS and import from the dist directory, so I don’t need to install additional dev dependencies or waste any more time on configuration.

The unit tests confirm that our nodejs module is working, but if we were to import our library with webpack, we’d receive an error: the auto-generated JS glue contains `import.meta.url`, and webpack chokes on this expression until webpack 5. So until webpack 5 is released (hopefully soon, as v5.0.0 release candidates are already out), we need to replace this expression in the glue code ourselves.

The last thing we need to do is expose the TypeScript definitions that wasm-bindgen generates. The fix involves copying these definitions to the output directory, and for all the references to work, wasm-pack must output to a directory beneath src (as shown in the package.json build step above). You’d think TypeScript would have a flag for copying over declaration files, but it explicitly doesn’t.

To accomplish those two things we append our own custom rollup plugin to the plugins already used (wasm and typescript):

```javascript
// Assumes `import fs from "fs";` and `import path from "path";` at the top of the rollup config
[
  wasm({ maxFileSize: 100000 }),
  typescript({ outDir: `dist/${fmt}` }),
  {
    // We create our own rollup plugin that copies the typescript definitions that
    // wasm-bindgen creates into the distribution so that downstream users can benefit
    // from documentation on the rust code
    name: "copy-pkg",
    generateBundle() {
      // Replace the `import.meta.url` expression that wasm-bindgen emits, as webpack
      // doesn't understand it yet
      const data = fs.readFileSync(path.resolve(`src/pkg/jomini_js.js`), "utf8");
      fs.writeFileSync(path.resolve(`src/pkg/jomini_js.js`), data.replace("import.meta.url", "input"));

      fs.mkdirSync(path.resolve(`dist/${fmt}/pkg`), { recursive: true });
      fs.copyFileSync(
        path.resolve("./src/pkg/jomini_js.d.ts"),
        path.resolve(`dist/${fmt}/pkg/jomini_js.d.ts`)
      );
    },
  },
]
```

It’s a bit of a bummer that this last stage doubled the amount of configuration. This is where one starts debating whether adding a plugin to handle these edge cases becomes worthwhile, and I can totally see that (hence why I said this is the opinionated section).
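As an alternative to rewriting the glue file on disk inside `generateBundle`, Rollup’s `transform(code, id)` hook can patch the code in memory as each module is loaded, so the patch applies to the code being bundled in that same build and the files in `src/pkg` stay exactly as wasm-pack generated them. The sketch below is a hypothetical plugin factory (`patchImportMeta` and the plugin name are made up) written without Rollup’s type imports to stay self-contained; returning `null` tells Rollup to leave a module unchanged. Copying the `.d.ts` file would still be needed.

```typescript
// Hypothetical sketch of a Rollup plugin that rewrites `import.meta.url` in the
// wasm-bindgen glue while bundling, instead of editing src/pkg on disk.
interface TransformPlugin {
  name: string;
  transform(code: string, id: string): string | null;
}

function patchImportMeta(glueSuffix: string): TransformPlugin {
  return {
    name: "patch-import-meta",
    transform(code, id) {
      // Only touch the generated glue module (normalize Windows separators first).
      if (!id.replace(/\\/g, "/").endsWith(glueSuffix)) return null;
      // Same substitution as the copy-pkg plugin: fall back to the `input`
      // argument that the wrapper always passes to init().
      return code.replace(/import\.meta\.url/g, "input");
    },
  };
}
```

It would be added to the `plugins` array as `patchImportMeta("src/pkg/jomini_js.js")`.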

Conclusion

A library for JS written in Rust can be:

- faster thanks to Wasm performance

- small thanks to small Wasm bundles produced by wasm-bindgen and Rust

- quicker to develop as the logic doesn’t need to be ported

- TypeScript friendly

- deployed server side and in the browser

- an implementation detail for browser users when inlined as base64

And along the way I learned how expensive object creation can be.

I am not advocating that all JS libraries suddenly start using Wasm, but for projects that need to find a sweet spot of performance (remember to profile!), small bundles, and lower maintenance from a common core, there aren’t many downsides to JS libraries cemented by Rust + Wasm.
