Optimizing Code and Why We Should All be Language Agnostic

Published on:Donald Knuth has been famously quoted as saying:

We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil. Yet we should not pass up on our opportunities in that critical 3% (pp. 268).

Knuth, D. E. (1974). Structured Programming with go to Statements. ACM Computing Surveys, 6, 261–301.

Michael Abrash also shares a similar opinion:

All too many programmers think that assembly language, or the right compiler, or a particular high-level language, or a certain design approach is the answer to creating high-performance code. (pp. 6)

Know when optimization matters—and then optimize when it does! (pp. 15)

Abrash, M. (1997). Michael Abrash’s Graphics Programming Black Book. Coriolis Group.

What do these quotes have in common? They all deal with my obsession with fastest possible code. Seriously! I am afflicted with a disease that makes me constantly think “How can I make this code more efficient?”. Tools like Visual Studio’s Performance Explorer with their ease of use aren’t making it any better. If anything they are making my condition worse because these tools work. With their automatic highlighting of where the CPU spends most of the time, it’s as if they are handing me slow code on a silver platter asking for me to fix it. I can’t refuse!

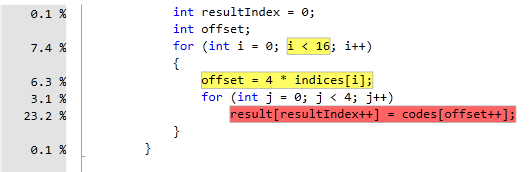

Visual Studio Profiler showing where time is spent in my code

You have no idea how long I have stared at this trying to figure out a way to make this faster. How on earth can a closing bracket be 0.1% of the CPU time…ahhhh… it may be time for loop unrolling

Back on topic. Almost all programmers know the “premature optimization is the root of all evil” fragment of Knuth’s book, which can be very misleading. Knuth was talking about the noncritical aspects of our programs, the code that is executed a few a times. Who cares if you wrote verbose code for the initialization of your program? No one. Both authors stress that once you have a finished a working prototype, then you’re allowed to go over your code and profile it. If you try to optimize every line of code you write as you write it, your project will never get completed, as your company will fire you for taking up too many resources and instead hire an intern to write quick, albeit bug ridden, code. You don’t want to let that happen.

I personally have been afflicted with premature optimization. Following is code that I have used in production. For those wondering, it is an often executed piece of code. Can you spot what is wrong?

private int _totalLoss;

public int TotalLoss

{

get { return (_totalLoss != 0) ?

_totalLoss :

_totalLoss = firstLoss + secondLoss; }

}

If you didn’t spot what is wrong, then you and I would have been in the same

boat for a year. But for those that are familiar with patterns will recognize

horribly executed Lazy Initialization. The goal of the pattern is to

instantiate costly variables that may or may not be used at a later date. Last

time I checked, ints weren’t costly, and instead of using the

proper technique of Nullables, my code assumes that an int is unitialized if it has

a value of zero. If the result of the summation was zero, the code would

repeatedly perform needless conditional and assignment operations. Why did I

write such horrible code? I was on an optimizing rampage, refactoring this and

that without profiling. I had a grand thought in my head that what I was doing

was making a difference. What was the result of my destruction? Nothing but

wasted time. I could have spent my time better if I had mowed the lawn, washed

the cars, and gotten some fresh air. Instead the black hole that is premature

optimization swallowed me whole. Because of that experience I have a rule of

thumb I go by and Abrash puts it succinctly:

high-performance code shouldn’t get in the user’s way—and that’s all.

It pains me to say this, but Abrash is telling everyone that programming isn’t a

competition to see who can write the most optimized code. If it was, we’d all

be writing FORTRAN, but we don’t and now we reach the main point of the article. It

doesn’t matter the language you chose, you achieve the same results. Nowadays

it is just a matter of how long it takes for implementation and resource

consumption. This is what has given rise to what I deem as Productive Languages,

such as C#, Java, MATLAB, and any language that sits on a large framework.

These languages allow a single programmer to write equivalent code in much less

space and time than lower level languages. Take for instance the fast Fourier

transformation. Writing it in C++

would take most the day, but in MATLAB it is as simple as the function call

fft().

Now comes the horde of computer science majors who will run up to me, look at their shoes, and awkwardly stammer, “MATLAB slow. C++ fast”, which I will disagree with them. I claim that C++ is slow, assembly language is slow, and every language that exists is slow. It is not the language that determines the speed of execution of a program, it’s the design of the program and algorithms used. Try as you might, but your bubble sort assembly code will be slower than my Python quick-sort. A compiler or interpreter can’t read our minds. We can’t say, “Here’s a list of numbers and sort it in the most efficient manner possible,” and then depending on the number of items in the list, how they are ordered, and the architecture of the computer, have specific machine code spat out that satisfies the constraint. But until that day, programmers will continue to sort their favorite way.

Saying this, what if, after finishing your program, you have identified slow code that is optimized as far as your language and design allow, what do you do? First, recheck your design then if you still have a problem and you use a static language, then you can always go to C, or if you’re adventurous, use assembly. It may seem like I’m contradicting myself, but I’m not. In a lower level language, while there is more room for sloppiness, there is also more room for optimization. According to Knuth, we have found our 3% and we know that this needs optimizing, so it could prove beneficial to write code in a language that is closer to the hardware. Notice that I said it could prove beneficial, as the assembly code written could use a more naive method and be slower. It all boils down to how much one knows about their language. Ideally, a master of his or her language won’t have to think about optimization because their code will be fast enough on the initial write. Again, we’re not going for the most efficient code ever written, and this is where I have a problem and I’m trying to overcome this.

I believe we should all be agnostic when it comes to languages and optimization. Instead all programmers should focus on getting consumers a product that is bug free and appears fast.

For all those C/C++ fans out there who thought this article didn’t apply to them, here is a nice quote from Bjarne Stroustrup, who said in his book The Design and Evolution of C++:

It would be nice if every kind of numeric software could be written in C++ without loss of efficiency, but unless something can be found that achieves this without compromising the C++ type system, it may be preferable to rely on Fortran, assembler, or architecture-specific extensions.

By the way, in the code that was posted where I posed the question, how can this be improved, I was able to work some magic using bit manipulation, loop unrolling, and variable elimination to gain an 8% boost. If you had to optimize that code, how would you do it?

Comments

If you'd like to leave a comment, please email [email protected]

C# for loop structure of C# is like an all-in-one looping utility. The C# for loop acts like the while loop only with some extra features. c# for