Initial Impressions of a Website with Modern Build Tools


Introduction

No one can deny that Javascript has significantly changed in the last few years. If you were a Javascript master in 2012 and lived under a rock for the last few years, you’d be shocked to see what the community had evolved into. Since I’ve been at least dabbling in Javascript since 2012, I thought I’d give my view on this topic. To give a fair and proper evaluation of the current state of affairs in Javascript, it’s best to first look where I’ve (we’ve) been.

First Encounter

I remember the first day I touched Javascript because as part of my freshman internship I had to keep a log of what I did each day. On May 7th 2012, I wrote:

Bug fixing. Finished fundamental Javascript tutorial. Started jQuery Tutorial.

The tutorial mentioned was a Pluralsight course I had signed up for with a temporary free account thanks to my edu email address. As an aside: the URL contains ‘jscript-fundamentals’, and JScript is not to be confused with JavaScript!

As an intern I was tasked with prototyping a web portal for engineers, and by the end of the summer, I had a fully working version. Along the way I recorded some gold nuggets in the work log such as “Learning about KnockoutJS”, “Exploration of MVVM”, and “Code refactor into KendoUI”. I can’t remember what I used to bundle the application because over a year later we see “Start of […] javascript upgrade” and “build process for js preprocessing”, where I finally start using RequireJS and Grunt. A dive into commit history showed that there was previously an html file with dozens of <script> tags (does anyone remember json2?) referencing my javascript files along with comments like:

It’s absolutely critical that one puts foo.js before bar.js

So no matter how bad one may think the Javascript ecosystem looks, just remember where we’re coming from.

So in mid-2013, the code below was state of the art.

define([
  "jquery",
  "foobar"
], function($, foobar) {
  "use strict";

  $.ajax({ /* ... */ });

  // enter usage of the revealing module pattern
});

I remember when the revealing module pattern was a big deal and all the rage for writing composable javascript. It’s hard to believe that nowadays I hardly use the traditional javascript module patterns. Javascript has changed that much, which is both a life saver and a scary thought.
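For anyone who never ran into it, here is a minimal sketch of the revealing module pattern in plain Javascript. The `counter` module and its function names are hypothetical and are not taken from the original application; the point is only that private state lives in a closure and the returned object "reveals" the public API.

```javascript
// Hypothetical counter module; the names are illustrative only.
var counter = (function () {
  // Private state: not reachable from outside the closure.
  var count = 0;

  function increment() {
    count += 1;
    return count;
  }

  function reset() {
    count = 0;
  }

  function current() {
    return count;
  }

  // "Reveal" only the functions that make up the public API.
  return {
    increment: increment,
    reset: reset,
    current: current
  };
})();

counter.increment();
counter.increment();
console.log(counter.current()); // 2
console.log(counter.count);     // undefined, the state stays private
```

In a RequireJS setup like the one above, the returned object would typically be what the `define` callback hands back to the loader.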

Despite all the shortcomings a new hip Javascript programmer might have to say about the application, it is very much still in production today and has been for the past 3½ years with little patching needed. How many people get to say that about a web app?

Forget the bragging. Other frameworks blow the ad-hoc one I created out of the water and I’ve always been on the lookout.

KnockoutJS and D3

While I had only read briefly about KnockoutJS before, Christmas break 2013 allowed me time to dive deeper into the library. Coming from a WPF MVVM background, Knockout’s slogan stood out, “Simplify dynamic JavaScript UIs with the Model-View-View Model (MVVM)”.

The project was to improve the University of Michigan’s Incident Log, which did not even look as good as it does now, so that users could see the incidents on a map along with cool but purposeless statistics. The result is umich.nbsoftsolutions.com. You’ll notice that it is no longer working: the University upgraded their website, which broke my custom scraper built on BeautifulSoup.

For the frontend, there were about ten different script tags pointing at CDNs like cdnjs, which promised that if your site served assets a visitor had previously seen, the visitor could use their cached version. A couple of restrictions applied, which limited the usefulness: the URL had to be the same, so the asset had to come from cdnjs and the library version had to match. On the positive side, it could lighten the load on the server and load the site faster if the CDN harnessed geolocation to serve assets from the server closest to the visitor.

Each page had its own Javascript file written as an immediately-invoked function expression (IIFE). Thankfully, since the site had a narrow scope, all the functionality could be packed into a single file without too much copying and pasting between pages.

I wrote a JSON API in Flask as opposed to what I had known at the time, Bottle. For me, Flask > Bottle, but Bottle still has its uses. I don’t understand why Bottle is contained in a single large file. Seems brittle.

What cracks me up is a comment I have in my Flask API about sending a certain JSON payload:

# Not to mention sending statistics is, by far, the largest contributor of
# bandwidth at 16KB uncompressed.

Not sure why I was worried about 16KB uncompressed when websites today are much more bloated. Wikipedia uses 50KB of Javascript. Bissell has 1000KB of javascript and styles. This graph shows that 363KB was the average amount of Javascript for the top 10,000 pages in February 2015. The Average Page is a Myth has the median around 400KB in 2015. And hot off the press, The average size of Web pages is now the average size of a Doom install. You know what webpage doesn’t use any Javascript? https://nbsoftsolutions.com :)

So worrying about the 16KB of data is being paranoid.

D3, the data visualization library, was the most outside my comfort zone. If it wasn’t for Mike Bostock and his countless examples (like how I repurposed this one) I probably would not have succeeded. I had the hardest time wrapping my head around the enter function. Despite my unexpected learning hump, D3 is one of the few libraries (some may even call it a framework) that has proved timeless, as I have a project that uses D3 for its math and path calculations, and less for direct DOM manipulation. I’m excited that D3 is planned to be broken up into modules!
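What finally made enter click for me can be modeled without D3 at all. The sketch below is a simplified model of a keyed data join in plain Javascript; it is not D3's implementation or API, and `dataJoin`, the incident types, and the counts are all made up for illustration. "Enter" is simply the data that has no element on the page yet.

```javascript
// Simplified model of a keyed data join. This is NOT D3's implementation or API;
// `dataJoin` and its arguments are hypothetical names for illustration.
function dataJoin(existingKeys, data, keyFn) {
  const existing = new Set(existingKeys);
  const incoming = new Set(data.map(keyFn));

  return {
    // Data with no matching element yet: conceptually what "enter" hands you.
    enter: data.filter((d) => !existing.has(keyFn(d))),
    // Data that already has an element on the page.
    update: data.filter((d) => existing.has(keyFn(d))),
    // Elements whose data disappeared: candidates for removal.
    exit: existingKeys.filter((k) => !incoming.has(k)),
  };
}

// Keys of the elements currently on the page, and the fresh data to show.
const onPage = ['larceny', 'fraud'];
const incidents = [
  { type: 'fraud', count: 3 },
  { type: 'assault', count: 1 },
];
const joined = dataJoin(onPage, incidents, (d) => d.type);
```

Here `joined.enter` holds the assault entry (it needs a new element), `joined.update` holds fraud, and `joined.exit` holds the stale larceny key.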

Ember

I’ve been a closet fan of Ember since at least v1.6.0 (Summer of 2014). I remember v1.6.0 so distinctly because I had just started an internship at a hedge fund, and my internship project begged for something like Ember. I pleaded my case, if only to satiate my drive to learn and try my hand at web development (who cares if an intern fails!?). My wish was not granted (more like crushed!), so I fell back to reading blog posts and following releases while I waited for a side project where I could use Ember.

For someone on the outside, the appeal of Ember is its community. It seemed so well put together – not driven by some mega corporation with dubious intentions. The people behind it are brilliant, Tom Dale and Yehuda Katz to name a few. It was a community I wanted to be a part of.

Finally, in March of 2015, I got an excuse to start using Ember. A few months earlier I had sat next to a fascinating statistician on a train from Chicago to Ann Arbor. Although he had little to no programming experience, I was able to explain multi-threading and synchronization issues to him with an analogy of airplanes sharing a runway, and he grokked it far better than I expected. (Shortly after, I wrote Await Async in C# and Why Synchrony is Here to Stay and C# and Threading: Explicit Synchronization isn’t Always Needed). My new friend, in March 2015, had just arrived in Africa and needed a website where African startups could aggregate their data for potential investors.

With few requirements and my imagination to go on, I started hacking away. I volunteered to send weekly updates to hold myself accountable and to keep a steady line of communication. Initially, this worked great and progress was made; however, this trend did not last. I started stalling on Ember itself. The community was at an awkward pass between Ember 1.x and 2.x, there was a movement to migrate to a “pod” directory structure, Ember CLI was in constant churn and had notoriously bad performance on Windows, and the Ember libraries I wanted to incorporate always seemed to be waiting for the next release to change their API. Perhaps not surprisingly, about a month into development my enthusiasm ran out of steam. My updates became progressively shorter and my friend soon became silent.

It’s for the best that I stopped working on the project because I was going to be reinventing a CMS, and nobody wants to be stuck with that task.

Most of the project, at the time that I stopped working on it, consisted of Handlebars templates and CSS. The most interesting Javascript dealt with validating a form submission, and it does highlight the usage of Promises:

if (this.get('isValid')) {
  var promise = this.get('model').save();
  callback(promise);
  promise.then(startup =>
    this.transitionToRoute('startups.show', startup));
} else {
  this.set('errorMessage', true);
  callback(new Ember.RSVP.Promise((fulfill) => {
    fulfill();
  }));
}

A Node Diversion

We’re almost to the main point of the article, I swear, but I must quickly mention Node. Up until this point I had written no tests for any Javascript I had penned, which is crazy. Two years of Javascript and not a test written. If you know me, this should be shocking. I tend to emphasize testing. My excuse would be that it’s incredibly hard to test client side Javascript, as it’s fickle and requires some sort of browser to run.

To remedy this testing deficiency, I created a library to parse a file format. I had high hopes that I’d be able to incorporate it in browsers or a node based app. Unfortunately, parsing a 50MB file using Jison, Javascript’s version of Bison, would exhaust V8’s heap space. Handrolling a version that would allow streaming failed because it’s notoriously hard to parse a stream in an asynchronous environment. Just take a look at jsonparse, the streaming JSON parser, look me straight in the eyes, and say that writing a state machine like that for a more difficult format would be a task you’d relish.
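To give a feel for why streaming is awkward, below is a toy incremental parser for whitespace-separated `key=value` pairs. It is a hypothetical sketch, far simpler than jsonparse and unrelated to the library I actually wrote; `createParser`, `write`, and `end` are invented names. Even at this size, the parser has to carry its state between chunks, because a chunk can end in the middle of a key or a value.

```javascript
// Toy incremental parser for whitespace-separated `key=value` pairs.
// Hypothetical sketch; not the author's library and not jsonparse.
function createParser() {
  const result = {};
  let state = 'KEY'; // either 'KEY' or 'VALUE'
  let key = '';
  let value = '';

  // Stores the pair collected so far and goes back to reading a key.
  function finishPair() {
    if (key !== '') result[key] = value;
    key = '';
    value = '';
    state = 'KEY';
  }

  return {
    // Accepts a chunk of any size; a chunk may end mid-token.
    write(chunk) {
      for (const ch of chunk) {
        if (state === 'KEY') {
          if (ch === '=') state = 'VALUE';
          else if (!/\s/.test(ch)) key += ch;
        } else if (/\s/.test(ch)) {
          finishPair();
        } else {
          value += ch;
        }
      }
    },
    // Flushes the final pair, which has no trailing whitespace to end it.
    end() {
      if (state === 'VALUE') finishPair();
      return result;
    },
  };
}

const parser = createParser();
parser.write('foo=b');  // chunk boundary falls in the middle of a value
parser.write('ar ba');  // ...and in the middle of the next key
parser.write('z=qux');
const parsed = parser.end();
```

After `end()`, `parsed` holds both pairs even though no single chunk contained a complete one. A real format with nesting, quoting, and escapes multiplies the number of states, which is where the relish runs out.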

The project did serve as a good training ground for Gulp, CommonJS, and Mocha. With Mocha, I was able to finally write tests like the following:

var parse = require('../lib/jomini').parse;
var expect = require('chai').expect;

describe('parse', function() {
  it('should handle the simple parse case', function() {
    expect(parse('foo=bar')).to.deep.equal({foo: 'bar'});
  });
});

These tests would then be executed in a Node environment. I briefly tried to see if the tests would execute in a browser environment but was unable to (this is solved with a compilation step discussed later).

Enter 2016

Several weeks ago, while updating some of my sites to Let’s Encrypt, I found out that the University of Michigan updated their Incident Log (mentioned earlier in the post) to expose a JSON endpoint of the incidents on a given day. The problem is that the endpoint would take several seconds to respond and the interface was lacking. There were no cool but purposeless statistics! This presented the perfect learning opportunity, as I had recently read State of the Art JavaScript in 2016 and wanted to try out the technologies it mentioned.

One of the libraries was React. My only familiarity with it came from dabbling in an Electron side project, where I realized that React was the most popular UI framework for Electron.

Below are my impressions on using some of the technologies listed in the post.

Modern Code

Thanks to Babel one can use Javascript concepts that won’t be natively supported for years to come. Below are some code snippets from the actual site that demonstrate their usefulness. For more information on the future of Javascript, see ES6Features.

List destructuring and new variable keywords:

// Get all the data before and after a date split into two lists
const [bf, af] = partition(data, (x) => moment(x.date).isBefore(date, 'day'));
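The `partition` helper is not defined in the snippet. lodash ships a function with this name and shape, so the version below is only a hypothetical stand-in to make the destructuring concrete. It also swaps moment for plain ISO date strings, which compare correctly as text; the sample dates are made up.

```javascript
// Hypothetical stand-in for the `partition` helper used above:
// splits items into [those that pass the predicate, those that fail].
function partition(items, predicate) {
  const pass = [];
  const fail = [];
  for (const item of items) {
    (predicate(item) ? pass : fail).push(item);
  }
  return [pass, fail];
}

// Made-up incidents; ISO date strings sort the same way as the dates they name.
const sample = [
  { date: '2016-04-01' },
  { date: '2016-04-15' },
  { date: '2016-05-02' },
];

// Array destructuring pulls the two lists out in one statement.
const [earlier, later] = partition(sample, (x) => x.date < '2016-04-15');
```

`earlier` ends up with the April 1st entry and `later` with the other two, each bound by `const` so neither can be reassigned by accident.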

Object destructuring, arrow functions, and string templating:

const Map = ({ address }) => {
  const url = '//maps.googleapis.com/maps/api/staticmap';
  const size = '250x250';
  const src = `${url}?size=${size}&markers=${escape(`|${address}, Ann Arbor, MI`)}`;
  return <img src={src} />;
};

import React, { Component, PropTypes } from 'react';
import ReactCSSTransitionGroup from 'react-addons-css-transition-group';
import Map from './Map';

ES7 async/await with enhanced object literals:

export function fetchPostsIfNeeded() {
  return async (dispatch) => {
    // Get the last time the data was updated
    const lastUpdate = await localforage.getItem('last-update');

    // If the data has never been updated or the data hasn't been updated
    // in a day, update the data by sending a request to the backend
    const data = !lastUpdate || !moment().isSame(lastUpdate, 'day') ?
      await fetchData(dispatch) :
      await localforage.getItem('data');

    // Let the frontend know the data
    dispatch({ type: REQUEST_DPS_DONE, data });
  };
}

I really enjoy these features, and going back to the previous style of Javascript would definitely take a toll on my productivity and enthusiasm.

The Cost

With all the cool libraries and modern features, there is a cost. Right now, udps.nbsoftsolutions.com sends 309KB of Javascript to the client (gzipped and minified). While that is less than the average site, my side project does not have nearly as many features as other sites. It is also easy to picture the payload growing as features are added. For instance, I plan on adding neat graphs, which will involve d3, an additional 53KB of gzipped and minified Javascript. Since no business value is being derived from the site, I don’t mind so much. There are ways to mitigate this issue, like code splitting so that not all the js is contained in a single file.

CSS

Playing with CSS in this project blew my mind. I would previously put all my LESS or CSS in a single file to reference from HTML, but it is now possible, and some may even recommend in this componentized world, to create a CSS file for each component. Traditionally, this would have resulted in many files or a possibility for class names that would conflict across components.

Enter cssnext. Let’s say we create a file called About.css:

@import '../../css/Main.css';

.textual {
  margin: 0 auto;

  & p, & li {
    font-size: 1.25rem;
    line-height: 1.4;
  }
}

To those who know other CSS preprocessors like LESS or SASS, this will seem familiar:

- Copy and paste the contents of Main.css into said file
- Create a class named textual
- Any paragraph (p) or list item (li) nested inside an element with the textual class has modified text properties

How do we use About.css? In About.js we can import our CSS and access the textual property.

import React, { Component } from 'react';
import styles from './About.css';

export default class About extends Component {
  render() {
    return <div className={styles.textual} />;
  }
}

This is CSS Modules, and it is critical that the javascript code references classes through the property name styles.textual and not the class’ literal name 'textual', because CSS Modules hashes the class names so there is no chance of collisions between classes with the same name on different components.
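As a rough mental model of what the imported `styles` object contains, consider the toy below. The naming scheme and the hash function are made up for illustration; real tooling generates its own names, and `scopeClasses` and `simpleHash` are hypothetical helpers, not part of CSS Modules.

```javascript
// Toy model of CSS Modules scoping. The naming scheme and hash are made up;
// real tooling generates its own names.
function simpleHash(text) {
  let hash = 0;
  for (let i = 0; i < text.length; i += 1) {
    hash = (hash * 31 + text.charCodeAt(i)) >>> 0; // keep it an unsigned 32-bit int
  }
  return hash.toString(36);
}

// Builds the kind of object that `import styles from './X.css'` conceptually yields:
// a map from the name written in the CSS file to a generated, file-specific name.
function scopeClasses(fileName, classNames) {
  const styles = {};
  for (const name of classNames) {
    styles[name] = `${fileName}__${name}___${simpleHash(fileName + name)}`;
  }
  return styles;
}

// Two components that both define a class called `textual`.
const aboutStyles = scopeClasses('About', ['textual']);
const footerStyles = scopeClasses('Footer', ['textual']);
console.log(aboutStyles.textual !== footerStyles.textual); // true
```

Both components wrote `.textual`, yet the generated names differ, which is why the literal string 'textual' would match nothing in the rendered page.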

An interesting fact – I’ve yet to determine if it’s a net benefit or loss – is that, by default, the CSS is bundled with the Javascript, so there is no longer the main HTML file referencing both a CSS file and a Javascript file. Now it only references a Javascript file with styles contained within. With HTTP2 slowly being rolled out with multiplexing, the benefit of a single request diminishes but the argument can be made that cognitive load is still decreased.

Testing

An adequate testing story is lacking, especially for a frontend with a build chain such as ours. I’m extremely familiar with unit testing and frameworks in other languages, yet it took me an entire weekend to get a sane testing plan down. I very nearly called it quits. First, I started easy, using only Mocha, Chai, and expect to test pure javascript (non-react/redux/dom). It was easy enough to plug the babel compiler into mocha so I could also write tests in ES6/7.

The problem arises when we start testing React components. Don’t worry, I hear everyone is moving to Enzyme, so I prepared it, installed it, and cracked my knuckles. Baby steps, let’s start with the basic component:

import React from 'react';
import styles from './Footer.css';

const Footer = () => {
  const footmsg = 'Made with \u2764 by ';
  return (
    <footer className={styles.footer}>
      <p>{footmsg}
        <a href="https://nbsoftsolutions.com">
          Nick Babcock
        </a>
        2016
      </p>
    </footer>
  );
};

export default Footer;

And the corresponding baby test for our baby component:

import React from 'react';
import { expect } from 'chai';
import { shallow } from 'enzyme';
import Footer from '../js/components/Footer';

describe('<Footer />', function () {
  it('should give credit to the original author', function () {
    const wrapper = shallow(<Footer />);
    expect(wrapper.text()).to.contain('Nick Babcock');
  });
});

This test will actually fail to compile. Do you know why? If you guessed CSS Modules, you’d be correct. Mocha and babel don’t know how to deal with css (and why should they)! A little bit of googling leads us to writing a setup.js require script for mocha:

import hook from 'css-modules-require-hook';
hook({ extensions: ['.css'] });

Tests now passing!

Unfortunately, this euphoric feeling soon passed. Take a look at the next component. After the last showcase, the problem with this one should be easier to guess.

import React, { Component } from 'react';
import Message from './About.md';

export default class About extends Component {
  markup() {
    return { __html: Message };
  }

  render() {
    return (
      <div dangerouslySetInnerHTML={this.markup()} />
    );
  }
}

Ah, we’re importing a markdown module. Same issue as last time, except this time no one has written a ‘markdown-require-hook’. Pondering this thought for a couple of hours made me realize that I may have been going down the wrong path, because I didn’t want to add a new require hook to the tests every time a new file type is imported in the source.

Maybe front end tests need something more – maybe they need to be webpack-ed as well to run in a browser-like environment. I’m familiar with the headless browser, PhantomJS, and mocha-phantomjs seems popular enough, so combine the two? No. Wrong path. Let me save you time, and point you to the webpack documentation on testing (obvious in hindsight, isn’t it?). There it mentions Karma, which is a self-described “Spectacular Test Runner for Javascript”, and it did not disappoint. There was enough internet documentation (blogs, github) that I pieced together a karma.conf.js that worked! It’s clear skies from here!
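For reference, a karma.conf.js along these lines might look like the sketch below. It is not the exact file from the site: it assumes the karma-mocha, karma-webpack, karma-sourcemap-loader, and karma-phantomjs-launcher plugins are installed, and the paths `./webpack.config.js` and `test/index.js` are hypothetical.

```javascript
// karma.conf.js: a sketch, assuming the karma-mocha, karma-webpack,
// karma-sourcemap-loader and karma-phantomjs-launcher plugins are installed.
// The file paths below are hypothetical.
const webpackConfig = require('./webpack.config.js');

module.exports = function (config) {
  config.set({
    frameworks: ['mocha'],
    browsers: ['PhantomJS'],
    // A single entry file that pulls in every test keeps the build to one bundle.
    files: ['test/index.js'],
    preprocessors: {
      'test/index.js': ['webpack', 'sourcemap'],
    },
    // Reuse the application's loaders so CSS and markdown imports resolve in tests too.
    webpack: {
      module: webpackConfig.module,
      devtool: 'inline-source-map',
    },
    reporters: ['progress'],
    singleRun: true,
  });
};
```

The key design choice is handing the application's own loader configuration to the test build, so anything the source can import, the tests can import as well.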

Well, no, not exactly. There may be a series of steps one needs to take as one dives deeper into testing components. For instance, when the component tests needed a real DOM, compilation errors started cropping up and the only fix was advice from a Github thread. The fix was to add more settings to my config files, which I was basically copying and pasting in blindly. Luckily, it worked, but crawling Github/stackoverflow for solutions to copy and paste is worrying.

On a different note, a pleasant surprise with our setup is that our CSS Modules are compiled the same way, so we can use styles.foobar to reference the compiled name!

Benchmarking

It is important for any application to have benchmarking because it ensures that the hot spots in your code base don’t slow your application down enough for the user to notice. When I deployed the first version of the site, I noticed significant lag between when the user would select a date with no incidents and the suggestion text popping up for a better date.

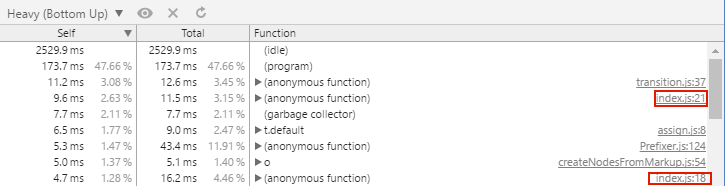

When faced with slower than expected code, the first step is to profile the production code to find what the bottleneck is. Don’t guess and don’t use development code. Most browsers have profiling tools that will indicate code that needs improvement. Below is a screenshot of Chrome’s profiling tool where optimizations are not needed:

Profiling in Chrome

The profiling tool doesn’t present helpful function names, but it contains a link to the source mapped source code, which will help track down the issue.

There is no need for optimizations because the code is idling most of the time, but it wasn’t always like this. When I noticed the lag in the site, the profiling showed a lot of time was lost in calling momentjs functions. Now it’s time for the optimizations.

If there is one de facto benchmarking library for Javascript, it’s Benchmark.js. Before you get too far, Benchmark.js does not play well with Webpack, so we’ll have to settle for running our benchmarks on Node instead of a browser, which isn’t too hard to stomach. The one tricky aspect is that our code is written in ES6/ES7, but Node doesn’t support all of the new standard. We could have an intermediate step where we run the code through Babel and then run it through Node, or the easier way is to just use babel-node, which eliminates the intermediate step.

One thing that I learned through benchmarking is that moment.js, for all of its ergonomics, is an extremely slow library and one is much better off writing one’s own functions for hot paths. In fact, the benchmarks show that my hand-written replacement is nearly two orders of magnitude faster than the equivalent moment.js function.
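To illustrate the kind of replacement meant here, below is a hypothetical hand-written same-day check. The assumption that the hot spot was a day comparison comes only from the `isSame(…, 'day')` call shown earlier in the post; the actual replaced function may have been different. The `timeIt` helper is a crude stand-in for Benchmark.js, used only to keep the sketch self-contained.

```javascript
// Hypothetical hand-written replacement for a same-day comparison.
// It compares calendar fields in local time and does no parsing or validation.
function isSameDay(a, b) {
  return a.getFullYear() === b.getFullYear() &&
    a.getMonth() === b.getMonth() &&
    a.getDate() === b.getDate();
}

// Crude timing helper; a real benchmark should use a proper harness.
function timeIt(label, fn, iterations) {
  const start = Date.now();
  for (let i = 0; i < iterations; i += 1) fn();
  console.log(`${label}: ${Date.now() - start}ms for ${iterations} runs`);
}

// Sample dates in local time (months are zero-based, so 3 is April).
const morning = new Date(2016, 3, 15, 8, 0);
const evening = new Date(2016, 3, 15, 22, 30);
const nextDay = new Date(2016, 3, 16, 0, 5);

timeIt('isSameDay', () => isSameDay(morning, evening), 1e6);
```

The speed comes from skipping everything a general-purpose date library does on each call, such as cloning and normalizing its inputs. The trade-off is that this function only accepts `Date` objects and ignores time zones other than the local one.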

Conclusion

The stack is a mouthful: React for the view, Redux for state management, Material UI, and CSS Modules with cssnext features through PostCSS, all written in modern Javascript compiled with Babel and run through webpack. Webpack is also invoked by the Karma test runner, which targets PhantomJS to run Mocha tests that assert with chai/expect, so that our components, handled by Enzyme, represent state correctly while other entities are mocked through sinon. It is doable, though, and now that I have been through the pain, I would do it over again, especially as a site grows more complex. I think if enough people became opinionated about this setup, then it could be more tightly bundled and allow for an easier time for new developers to get started with it.
