ENGR151++: Google AI Challenge

At the University of Michigan, all first-year engineers have to take an introductory computing and programming class, normally ENGR101, which involves C++ and MATLAB. However, those who have previous experience or an expressed interest in programming can opt for ENGR151, an accelerated introduction, in its place. I naturally chose ENGR151, and the parts that weren’t programming, such as logic gates and two’s complement, presented a challenge. When the first midterm arrived, the professor said that those who were interested and scored well enough would have the opportunity to join an ENGR151++ group. Joining the group meant that you were exempt from all homework, the second midterm, and the final, but you had to dedicate every waking moment to the group.

Long story short: I scored well enough and wanted to join, so I did, along with eleven others. We split into two groups, one dealing with hardware and the other with software. If I remember correctly, the hardware team wanted to design a hovercraft that could be controlled and displayed in an HTML5 browser, while the software team, which I joined, decided to participate in Google’s AI Challenge, which was called Ants. It was a turn-based game where your bot and others explored the world, gathering food, colliding with other ants, and closing opponents’ hills.

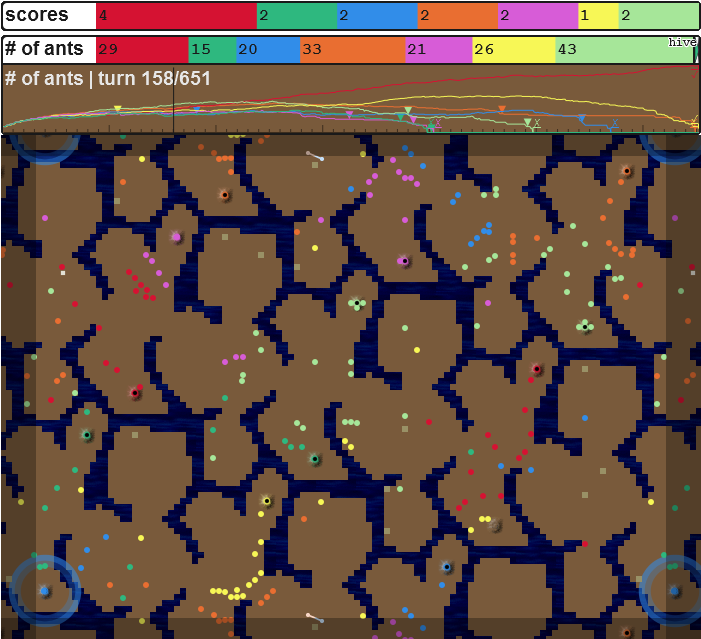

Sample Ants Game

The ants are the little circles, the food is the greyish squares, blue is water, and the colored holes are ant hills. To kill an enemy ant, one must have a greater number of ants within the attack radius; otherwise both ants die. Not shown in this picture is the fog of war. The match ended if all enemy hills were closed, if no food was being gathered, or if one’s ranking wouldn’t change no matter how the rest of the match played out.

Three times a week we had “lecture”, which consisted of the six of us (oftentimes fewer) meeting in a small room with our ENGR151 instructional aide, talking about basic algorithms and artificial intelligence. It was a relaxed environment, as the competition spanned the world and our competitors included video game companies, doctoral candidates, and people with years of programming experience. Needless to say, we didn’t have high hopes: we lacked skills, the competition had started a month before we had even heard of it, and the deadline was just a month away. This all changed when one of us submitted a test bot that used the most basic algorithm in graph theory, breadth-first search, to navigate the map and achieved a ranking of about 200th out of 7900 participants, according to the TrueSkill ranking system.
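For readers who haven’t met breadth-first search, here is a minimal sketch of the idea on a grid map. It is not our test bot’s code: the `Cell` struct, the `bfsDistance` function, the `'%'` water marker, and the wrap-around edges are all assumptions made for illustration.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <vector>

// Hypothetical cell position; rows and columns are zero-based.
struct Cell {
    int row;
    int col;
};

// Breadth-first search over a grid where '%' marks water (assumed marker)
// and every other character is walkable. Returns the number of moves on a
// shortest path from start to goal, or -1 if the goal is unreachable.
// Assumption: the map wraps around at its edges.
int bfsDistance(const std::vector<std::string>& grid, Cell start, Cell goal) {
    assert(!grid.empty() && !grid[0].empty());
    const int rows = static_cast<int>(grid.size());
    const int cols = static_cast<int>(grid[0].size());
    const int dRow[4] = {-1, 0, 1, 0};
    const int dCol[4] = {0, 1, 0, -1};

    // dist doubles as the visited set: -1 means "not reached yet".
    std::vector<std::vector<int>> dist(rows, std::vector<int>(cols, -1));
    std::queue<Cell> frontier;
    dist[start.row][start.col] = 0;
    frontier.push(start);

    while (!frontier.empty()) {
        const Cell cur = frontier.front();
        frontier.pop();
        if (cur.row == goal.row && cur.col == goal.col) {
            return dist[cur.row][cur.col];
        }
        for (int d = 0; d < 4; ++d) {
            // Wrap coordinates so stepping off one edge enters the opposite one.
            const int r = (cur.row + dRow[d] + rows) % rows;
            const int c = (cur.col + dCol[d] + cols) % cols;
            if (grid[r][c] == '%' || dist[r][c] != -1) continue;
            dist[r][c] = dist[cur.row][cur.col] + 1;
            frontier.push({r, c});
        }
    }
    return -1;
}
```

Because the frontier is a first-in, first-out queue, cells are visited in order of increasing distance, so the first time the goal comes off the queue its distance is already the shortest one.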

This kicked us into high gear. Our goal was to finish in the top hundred. We split up into different roles based on our strengths. I volunteered to design the data structures and world algorithms our ants would rely on. After I built a memory-efficient flag class for individual ants using bit manipulation, I decided to research more advanced algorithms. I ended up replacing our breadth-first search with A*, and the Manhattan distance we used for calculating the distance between two objects with a modified Bresenham line algorithm. It was at this point in the competition that our bot started to time out: we were taking too long to make a decision, so Google automatically forfeited us. I couldn’t believe it, as I had written those algorithms with speed in mind. I had even used the Bresenham algorithm with MAAV, showing it was 32 times faster than the algorithm we had previously been using with OpenCV. My team wanted to revert to the simpler algorithms, but I stubbornly held my ground while I desperately searched for a speedup. I have no doubt this annoyed them. I admit, I wish I had listened to them earlier so that I could have focused on something else, as in the end we used the same basic algorithms we started with.
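To give a rough idea of what a bit-manipulation flag class can look like, here is a small sketch. It is not the class from our bot: the flag names, the `AntFlags` class, and the choice of a single byte of storage are all hypothetical and only illustrate the technique.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical per-ant states; names are illustrative, not from our bot.
enum AntFlag : std::uint8_t {
    kHasOrder  = 1u << 0,  // already given a move this turn
    kGathering = 1u << 1,  // heading toward food
    kAttacking = 1u << 2,  // heading toward an enemy hill
    kDefending = 1u << 3   // guarding our own hill
};

// Packs up to eight boolean flags into a single byte.
class AntFlags {
public:
    void set(AntFlag f) { bits_ = static_cast<std::uint8_t>(bits_ | f); }
    void clear(AntFlag f) { bits_ = static_cast<std::uint8_t>(bits_ & ~f); }
    bool test(AntFlag f) const { return (bits_ & f) != 0; }
    bool none() const { return bits_ == 0; }

private:
    std::uint8_t bits_ = 0;
};

static_assert(sizeof(AntFlags) == 1, "all flags should fit in one byte");

// Small usage check: setting and clearing one flag leaves the others alone.
inline void antFlagsExample() {
    AntFlags flags;
    flags.set(kGathering);
    flags.set(kHasOrder);
    flags.clear(kHasOrder);
    assert(flags.test(kGathering));
    assert(!flags.test(kHasOrder));
}
```

Storing each state as one bit instead of one `bool` keeps the per-ant footprint at a single byte, which adds up when a bot tracks hundreds of ants every turn.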

A couple of days before the official tournament began and the semester ended, we presented to the class what we had been doing while skipping out on class. After that, we put the finishing touches on our bot and submitted it. Before the tournament began and all rankings reset, we had reached an amazing 17th place. Once the tournament did start, I constantly checked how our bot was faring. Games were six hours apart and I would sit and watch them like an engaging movie. Sometimes my hall mates even joined in. It’s quite riveting watching virtual ants essentially battle to the death. Meanwhile, the semester had ended and everyone went back to where they were from, except our team still had a paper due to our professor, detailing the problems we had come across and their solutions. Thankfully, Skype allowed for free international communication and we finished the paper Google Docs style.

I am happy to announce that our bot, BigBadassBot, ended up finishing 43rd out of 7900 submissions. Not too shabby for a bunch of freshman programmers.

A bit of consolation for myself: after the competition, the major players decided to release their source code, and to my surprise they also used the basic breadth-first search. Who knew that something so elementary could prove so effective? But then again, when path planning has consistently been using 40% of the CPU throughout programming history, I shouldn’t be surprised.

Attached are the write-ups we submitted for our professor.
