DEFLATE yourself for faster Rust ZIPs

Published on:Table of Contents

The Rust crate for handling ZIP files, zip-rs is quite flexible. The zip crate is compiled with builtin features to support deflated data (among other compression algorithms). This makes it incredibly easy to hit the ground running for reading and writing zips.

We can disable these builtin features. This may sound undesirable, but in fact, it is my new favorite way to integrate zip functionality into apps I write:

[dependencies]

zip = { version = "0.6.2", default-features = false }

What’s the point in having a zip library that can’t work on encoded data?

So we can do it ourselves.

There are couple reasons for this:

The zip crate is in maintenance mode and is unlikely to receive significant updates. Normally, I’d be crestfallen to be relying on a passively supported library, but the zip crate was written such that one can enable only the core parsing of a zip’s structure. Reducing exposure to only the most central functionality of a crate that is unlikely to change, allows me the confidence to continue using it. The zip crate gives us the tools to decode the data ourselves without imposing an implementation, so I must thank the maintainers that had the foresight to allow for pluggable decoders.

That leads us to the other reason, which is performance and the focus of the rest of the article.

The example we’re working with is a zip file in-memory and we would like to inflate the contents also into memory. This may sound horrifying to memory conscious developers, but this is a pretty realistic representation when working in Wasm in the browser.

For our example, we’ll be benchmarking the builtin DEFLATE with manual implementations leveraging miniz_oxide and libdeflate. So let’s get our dependencies in order:

[dependencies]

libdeflater = { version = "0.10" }

miniz_oxide = { version = "0.6.1" }

anyhow = "1.0" # for convenience

[dependencies.zip]

version = "0.6.2"

default-features = false

features = ["deflate"] # for benchmark

The setup is pretty simple. We’ll have a cli where the first argument chooses how the data from stdin will be deflated. As a side note, the advent of WASI being able to consume stdin opens up a host of deployment options, so my default when writing CLI tools is avoiding file system access to ease Wasm deployments.

fn main() -> anyhow::Result<()> {

let stdin = std::io::stdin();

let mut lock = stdin.lock();

let mut zip_data = Vec::new();

lock.read_to_end(&mut zip_data)?;

let args: Vec<String> = std::env::args().collect();

let inflated = match args[1].as_str() {

"builtin" => builtin_zip(&zip_data),

"miniz" => miniz(&zip_data),

"libdeflate" => libdeflate(&zip_data),

_ => bail!("unrecognized argument"),

}?;

println!("{}", inflated.len());

Ok(())

}

Then we’re going to make a pass over the zip archive and collect basic metadata about the contained files, such as their inflated size and where the contents reside in the stdin data.

The inflated size is collected so we can pre-allocate storage.

#[derive(Debug, Clone, Copy)]

struct FileInfo {

start: usize,

end: usize,

inflated_size: usize,

}

fn archive_info<R: Read + Seek>(archive: &mut zip::ZipArchive<R>) -> anyhow::Result<Vec<FileInfo>> {

let mut info = Vec::with_capacity(archive.len());

for i in 0..archive.len() {

// Use zip's raw function to ignore the compression method for now

let file = archive.by_index_raw(i).context("expected zip file")?;

if file.compression() != zip::CompressionMethod::DEFLATE {

bail!("this test is only for deflated zips");

}

info.push(FileInfo {

start: file.data_start() as usize,

end: (file.data_start() + file.compressed_size()) as usize,

inflated_size: file.size() as usize,

});

}

Ok(info)

}

The first implementation should be the least exciting. It is the builtin functionality of the zip crate.

fn builtin_zip(zip_data: &[u8]) -> anyhow::Result<Vec<u8>> {

let reader = Cursor::new(zip_data);

let mut archive = zip::ZipArchive::new(reader).context("unable to parse zip archive")?;

let info = archive_info(&mut archive)?;

let out_len = info.iter().map(|x| x.inflated_size).sum();

let mut inflated = Vec::with_capacity(out_len);

for i in 0..archive.len() {

let mut file = archive.by_index(i).context("expected zip file")?;

file.read_to_end(&mut inflated)

.context("unable to inflate")?;

}

Ok(inflated)

}

Next comes miniz_oxide. The biggest difference is that we reference the compressed data held from stdin directly, and we need to allocate (instead of pre-allocate) the inflated contents, as miniz_oxide writes directly to the destination.

fn miniz(zip_data: &[u8]) -> anyhow::Result<Vec<u8>> {

/* ... snip same code from builtin ... */

let mut inflated = vec![0u8; out_len];

let mut inflated_start = 0;

for file in &info {

let written = miniz_oxide::inflate::decompress_slice_iter_to_slice(

&mut inflated[inflated_start..],

std::iter::once(&zip_data[file.start..file.end]),

false, /* zlib_header */

false, /* ignore_adler32 */

)

.map_err(|e| anyhow::anyhow!("unable to miniz inflate: {:?}", e))?;

if written != file.inflated_size {

bail!("unexpected number of bytes written");

}

inflated_start += written;

}

Ok(inflated)

}

An underappreciated advantage of this approach is that we only care about the zip archive for a short duration. Transferring an immutable byte slice around is significantly more ergonomic than a mutable reference to a ZipArchive when one has wrapper code around zip file handling.

Finally, libdeflate is almost exactly like miniz_oxide

fn libdeflate(zip_data: &[u8]) -> anyhow::Result<Vec<u8>> {

/* ... snip same code from miniz ... */

for file in &info {

let written = libdeflater::Decompressor::new()

.deflate_decompress(

&zip_data[file.start..file.end],

&mut inflated[inflated_start..],

)

.map_err(|e| anyhow::anyhow!("unable to libdeflate: {}", e))?;

/* ... snip same code from miniz ... */

}

Ok(inflated)

}

After building, we can benchmark with hyperfine

MY_FILE=./my.zip

EXE=./target/release/deflate-yourself

hyperfine --warmup 3 \

"$EXE builtin < $MY_FILE" \

"$EXE miniz < $MY_FILE" \

"$EXE libdeflate < $MY_FILE"

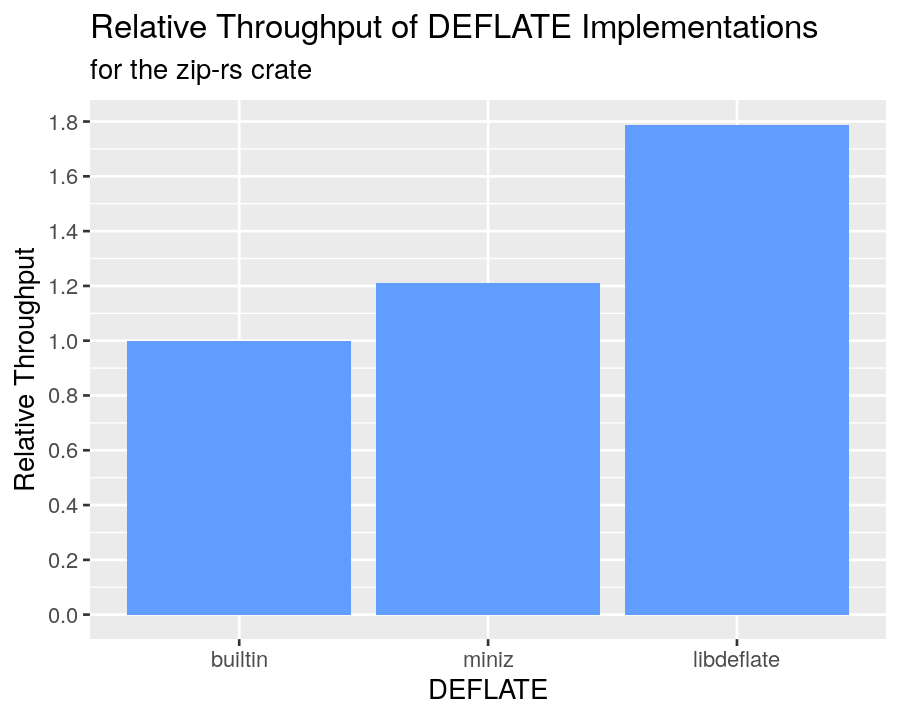

And hyperfine can export csv data that we can wrangle into a nice graph:

Relative throughput of DEFLATE implementations for the zip-rs crate

Takeaways:

- miniz_oxide has 1.2x the throughput of the builtin DEFLATE for zip-rs

- libdeflate has 1.8x the throughput of builtin

Those familiar with the zip crate, know that it relies on flate2 for its DEFLATE implementation, and flate2 defaults to miniz_oxide. So why does using miniz_oxide directly result in a 20% speed up? If I had to hazard a guess, this is measuring the overhead due to a zip-rs asking miniz_oxide to repeatedly operate over a temporary buffer for the Read trait that is a copy of the in memory data.

Since our data is already in memory, there is no need to copy when the data can be referenced directly. Working with an API that forces temporary buffers on in memory data is a good example of identifying a performance pitfall. Thankfully, the creators of the zip crate seem to have recognized this and allowed the workaround seen above, where we use our own inflation method and eke out more performance with the exact same implementation (miniz_oxide, which, by the way is pure Rust, 0 unsafe).

I’m also hoping that the 1.8x lead that libdeflate commands further increases with the recent improvements landed in master due to advancements from DEFLATE performance discussions. And whenever those improvements land, I know I’ll be able to take advantage of them thanks to flexibility afforded by the zip crate.

I love when everything dovetails nicely (Rust’s zero cost ffi with the C library libdeflate, and the zip crate allowing us to plug that in). Is this use case limited? Absolutely, but when an app frequently deals with inflating zips, being able to leverage the fastest method can make an app just a little bit better.

Appendix: the R code used to generate the graph.

library(scales)

library(tidyverse)

library(readr)

get_name <- Vectorize(function(command) {

if (grepl("builtin", command, fixed=TRUE)) {

return("builtin")

} else if (grepl("miniz", command, fixed=TRUE)) {

return("miniz")

} else {

return("libdeflate")

}

})

df <- read_csv("./deflate-yourself-benchmarks.csv")

df <- mutate(df,

name = get_name(command),

throughput = 1 / (mean / last(mean, order_by = mean)),

)

ggplot(df) +

stat_summary(aes(reorder(name, throughput), throughput),

position="dodge2",

geom = "bar",

fill = '#619CFF',

fun = mean) +

expand_limits(y = 0) +

scale_y_continuous(n.breaks = 10) +

xlab("DEFLATE") +

ylab("Relative Throughput") +

ggtitle("Relative Throughput of DEFLATE Implementations",

subtitle = "for the zip-rs crate") +

guides(fill=guide_legend(title="Name"))

ggsave('deflate-yourself-throughput.png', width = 5, height = 4, dpi = 180)

Comments

If you'd like to leave a comment, please email [email protected]