C# Refactoring and Failure

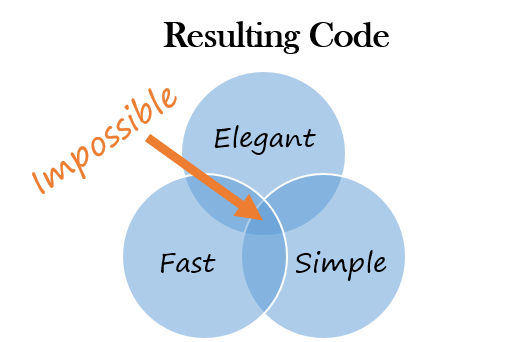

Published on:In the first lecture in an programming class at the University of Michigan, it is taught that it is highly improbable for one to write code that is simple, fast, and elegant. Instead we should strive for one of the categories or two if we’re ambitious.

- Simple:

- few lines of code using basic constructs

- Code can be digested in a short amount of time

- Fast:

- Code has been optimized to run as fast possible either in asymptotic complexity or runtime

- Elegant:

- Code is idiomatic of the language

- Code is beautiful and artful

Venn diagram of how simple, elegant, and fast code is impossible

Being the perfectionist that I am, I strive for my code to reflect all three of those goals. This can be seen in my project Pdoxcl2Sharp. I would describe it as elegant and fast, though far from simple. Since two out of three is bad, I decided to refactor a couple of functions.

Before:

private byte readByte()

{

if (currentPosition == bufferSize)

{

if (!eof)

bufferSize = stream.Read(buffer, 0, BUFFER_SIZE);

currentPosition = 0;

if (bufferSize == 0)

{

eof = true;

return 0;

}

}

return buffer[currentPosition++];

}

After:

private IEnumerable<byte> getBytes()

{

byte[] buffer = new byte[BUFFER_SIZE];

int bufferSize = 0;

do

{

bufferSize = stream.Read(buffer, 0, BUFFER_SIZE);

for (int i = 0; i < bufferSize; i++)

yield return buffer[i];

} while (bufferSize != 0);

eof = true;

yield break;

}

Benefits:

- Fewer lines of code

- Simplicity

- Improved elegance

- Variables defined at the class scope are moved to the functional level

The new code excites me. However, being anal, I profiled the two methods against each other. The new method was twice as slow. Visual Studio said that most of the time was being spent incrementing the enumerator. *Sigh*, an hour of my time wasted.

Why was the new method slower? First off, the actual number of comparisons hasn’t decreased, the conditional simply moves from encompassing additional logic to being used as a loop conditional. If the number of comparisons are equivalent, then what else could be causing the slowdown. I dug through the C# Specification and Jon Skeet’s, C# in Depth but I couldn’t find anything interesting relating to performance. On closer inspection, one can make the logical hypothesis that the refactored is actually more complex, as the read byte is yielded into another collection. The C# compiler abstracts away the complexity by doing all the heavy lifting of setting up an iterator class.

To set up the next refactor. When reading the file the paraser will label bytes if they are important, or leave them as “Untyped” if they aren’t.

Before:

enum LexerToken

{

Equals,

Quote,

Untyped

}

private static LexerToken getToken(byte c)

{

switch (c)

{

case EQUALS:

return LexerToken.Equals;

case QUOTE:

return LexerToken.Quote;

default:

return LexerToken.Untyped;

}

}

After:

enum LexerToken

{

Equals = EQUALS,

Quote = QUOTES,

Untyped

}

private static LexerToken getToken(byte c)

{

switch (c)

{

case EQUALS:

case QUOTE:

return (LexerToken)c;

default:

return LexerToken.Untyped;

}

}

Benefits:

- Less code

- Some might argue that it appears more simple

Like the previous example and by the title of the this post, one might guess the results. Believe it or not, the refactored version was slower by about 10-15%. I have no idea why the slowdown, as I refuse to believe that casting could cause a significant bottleneck.

In the end, after about two hours of refactoring and profiling, and an hour spent writing this post, the code appears the same. Some might be discouraged by this fact, but I feel that this reassures the fact that I write high performance code.

This kind of micro-optimization should not be pursued in the general case. I was profiling using a 10MB file and the original method ran in .135 seconds, switch refactoring ran in .17 seconds, and read refactoring ran in .24 seconds. As can be imagined, the file was in memory.

Comments

If you'd like to leave a comment, please email [email protected]