The Cost of TLS in Java and Solutions

Update (February 5th, 2018): For a new solution, see Dropwizard 1.3 Upcoming TLS Improvements.

Whenever someone said that SSL/TLS is slow, I used to chuckle and point them to Is TLS Fast Yet? Its main page has a couple of headline quotes from engineers at Google and Facebook, which I'll copy here for convenience.

On our production frontend machines, SSL/TLS accounts for less than 1% of the CPU load, less than 10 KB of memory per connection and less than 2% of network overhead. Many people believe that SSL/TLS takes a lot of CPU time and we hope the preceding numbers will help to dispel that.

- Adam Langley, Google

We have deployed TLS at a large scale using both hardware and software load balancers. We have found that modern software-based TLS implementations running on commodity CPUs are fast enough to handle heavy HTTPS traffic load without needing to resort to dedicated cryptographic hardware.

- Doug Beaver, Facebook

It seems like TLS should be a non-issue, but hold on. The chart in their server performance section appears to list mostly generic web servers, used either to serve static content or as some sort of proxy. What about application servers and frameworks? You'll have to dig into your application server to see whether it provides a fast TLS implementation. Personally, I care mostly about Dropwizard, which uses the Jetty web server underneath.

From the maintainers of Jetty:

Jetty [..] fully supports TLS, but that currently means we need to use the slow java TLS implementation.

This implicates every Java web server that uses the default Java TLS implementation. Netty, another Java web server, extols the benefits of OpenSSL:

In local testing, we’ve seen performance improvements of 3x over the JDK. GCM, which is used by the only cipher suite required by the HTTP/2 RFC, is 10-500x faster.

OK, let's measure the performance impact. We'll create a simple echo server that responds to the client with the same data the client sent. This is how one would write an efficient Dropwizard echo server:

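import com.google.common.io.ByteStreams;
import io.dropwizard.Application;
import io.dropwizard.setup.Bootstrap;
import io.dropwizard.setup.Environment;
import java.io.InputStream;
import javax.ws.rs.POST;
import javax.ws.rs.Path;
import javax.ws.rs.Produces;
import javax.ws.rs.core.MediaType;
import javax.ws.rs.core.StreamingOutput;

// EchoConfiguration is assumed to be an empty subclass of io.dropwizard.Configuration.
public class EchoApplication extends Application<EchoConfiguration> {

    public static void main(final String[] args) throws Exception {
        new EchoApplication().run(args);
    }

    @Override
    public void initialize(final Bootstrap<EchoConfiguration> bootstrap) {
        // Nothing to bootstrap for a bare echo server.
    }

    @Override
    public void run(final EchoConfiguration configuration,
                    final Environment environment) {
        environment.jersey().register(new EchoResource());
    }

    @Path("/")
    @Produces(MediaType.APPLICATION_OCTET_STREAM)
    public static class EchoResource {

        // Stream the request body straight back without buffering it all in memory.
        @POST
        public StreamingOutput echo(InputStream in) {
            return output -> ByteStreams.copy(in, output);
        }
    }
}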
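Before load testing, it helps to confirm that the server actually echoes. The sketch below uses the JDK 11+ HttpClient; it is not part of the original setup, and the class name EchoCheck is hypothetical. It defaults to http://localhost:8080/, Dropwizard's default application port. Pass another URL as the first argument if your configuration uses a different port.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.Arrays;

/** Hypothetical sanity check: POST a payload and verify the same bytes come back. */
final class EchoCheck {

    static boolean echoes(HttpClient client, URI uri, byte[] payload)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(uri)
                .header("Content-Type", "application/octet-stream")
                .POST(HttpRequest.BodyPublishers.ofByteArray(payload))
                .build();
        HttpResponse<byte[]> response =
                client.send(request, HttpResponse.BodyHandlers.ofByteArray());
        // The echo resource should answer 200 with a byte-for-byte copy of the body.
        return response.statusCode() == 200 && Arrays.equals(payload, response.body());
    }

    public static void main(String[] args) throws Exception {
        byte[] payload = new byte[1024];
        Arrays.fill(payload, (byte) 'a');
        // Assumed default URL; override with the first command-line argument.
        URI uri = URI.create(args.length > 0 ? args[0] : "http://localhost:8080/");
        System.out.println(echoes(HttpClient.newHttpClient(), uri, payload));
    }
}
```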

We’re going to put this application through three load tests:

- Plain HTTP

- HTTPS terminated by Jetty itself (see the Dropwizard 1.0 checklist)

- HTTPS that is terminated by a local HAProxy (described later)

When presenting the numbers, I want to de-emphasize the raw results and focus on comparing the tests against each other, since that is where the insight lies.

Results

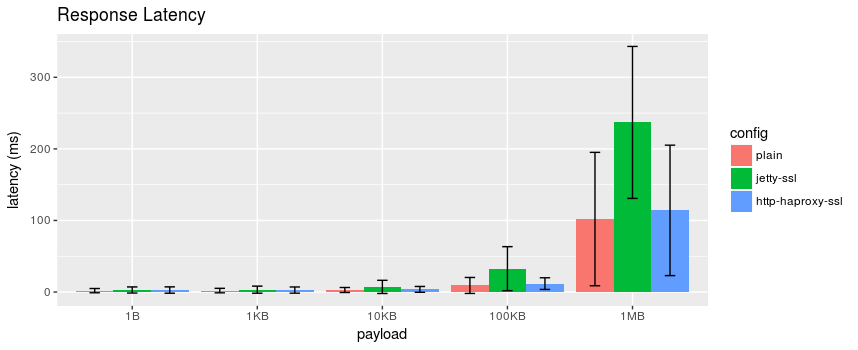

Response latency. Error bars represent a single standard deviation.

For our echo server, larger payloads take twice as long to be serviced when the JVM handles TLS. The standard deviations are also quite large, though I'm not sure what to make of that. Let's look at the relative response times for each payload.

library(readr)
library(dplyr)
library(ggplot2)

df <- read_csv("~/Documents/ssl-termination.csv")
df$config <- factor(df$config, levels=c("plain", "jetty-ssl", "http-haproxy-ssl"))

# Error bars span one standard deviation around the mean latency
limits <- aes(ymax = latency + stdev, ymin = latency - stdev)
dodge <- position_dodge(width=0.9)

ggplot(df, aes(fill=config, y=latency, x=payload)) +
  geom_bar(position=dodge, stat="identity") +
  scale_x_discrete(limits=c("1B", "1KB", "10KB", "100KB", "1MB")) +
  labs(title = "Response Latency") + ylab("latency (ms)") +
  geom_errorbar(limits, position=dodge, width=0.25)

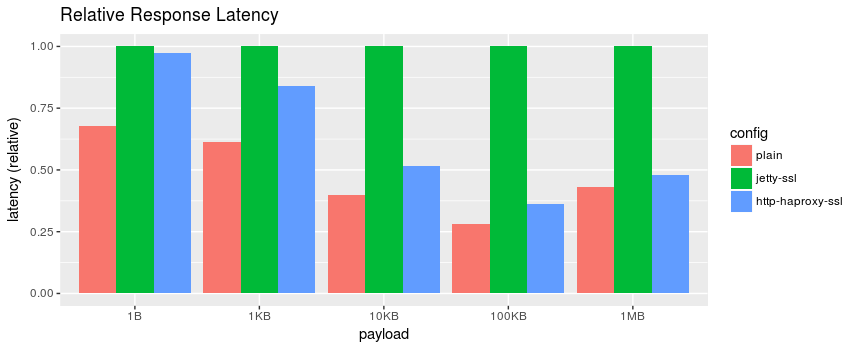

Relative Response Latency.

df_rel <- df %>%
  group_by(payload) %>%
  mutate(latency = latency / max(latency))

ggplot(df_rel, aes(fill=config, y=latency, x=payload)) +
  geom_bar(position="dodge", stat="identity") +
  scale_x_discrete(limits=c("1B", "1KB", "10KB", "100KB", "1MB")) +
  labs(title = "Relative Response Latency") + ylab("latency (relative)")
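The normalization in df_rel divides each configuration's latency by the latency of the slowest configuration for the same payload, so the slowest bar is always 1.0. The sketch below does the same computation in Java. The class name is hypothetical, and the numbers in main are made up for illustration; they are not taken from the charts.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Per-payload normalization: latency / max(latency) within each payload group. */
final class RelativeLatency {

    /** latencies: payload -> (config -> mean latency in ms). */
    static Map<String, Map<String, Double>> normalize(
            Map<String, Map<String, Double>> latencies) {
        Map<String, Map<String, Double>> result = new LinkedHashMap<>();
        for (Map.Entry<String, Map<String, Double>> byPayload : latencies.entrySet()) {
            // Equivalent of group_by(payload) + max(latency) in the dplyr pipeline.
            double max = byPayload.getValue().values().stream()
                    .mapToDouble(Double::doubleValue)
                    .max()
                    .orElse(Double.NaN);
            Map<String, Double> relative = new LinkedHashMap<>();
            byPayload.getValue().forEach(
                    (config, latency) -> relative.put(config, latency / max));
            result.put(byPayload.getKey(), relative);
        }
        return result;
    }

    public static void main(String[] args) {
        // Illustrative values only, not measurements.
        Map<String, Double> oneMb = new LinkedHashMap<>();
        oneMb.put("plain", 20.0);
        oneMb.put("jetty-ssl", 40.0);
        oneMb.put("http-haproxy-ssl", 25.0);
        Map<String, Map<String, Double>> input = new LinkedHashMap<>();
        input.put("1MB", oneMb);
        System.out.println(normalize(input));
    }
}
```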

At smaller payloads the benefits fall off, but using HAProxy as a TLS termination proxy appears to have been beneficial in every case.

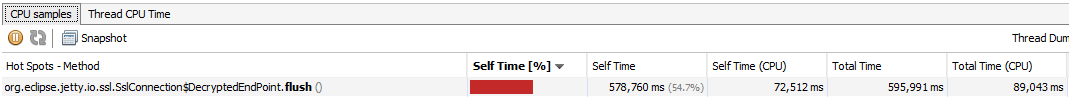

Before everyone starts jumping ship to TLS termination, you should only consider it if a large chunk of the processing time is spent in TLS. In the echo server, I measured the performance impact using jvisualvm. I’ve used jvisualvm before in Turning Dropwizard Performance up to Eleven, and the screenshot below depicts the top function for self time.

JDK SSL Operations showing up as a code hot spot when profiling

Notice that it is over 50%? Yeah, that’s a sign you should investigate TLS termination.
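To make the "over 50%" rule of thumb repeatable, a hypothetical helper like the one below sums the self time of methods in JDK TLS and crypto packages and divides by the total. The package prefixes are assumptions about where the JDK keeps that code. The input map is assumed to be copied from your profiler's hot-spot table, and the sample entries in main are illustrative, not real profiler output.

```java
import java.util.List;
import java.util.Map;

/** Hypothetical estimate of the share of self time spent in JDK TLS/crypto code. */
final class TlsShare {

    // Assumed package prefixes; adjust to whatever your profiler actually shows.
    static final List<String> TLS_PREFIXES = List.of(
            "sun.security.ssl.",
            "com.sun.crypto.provider.");

    static double tlsFraction(Map<String, Double> selfTimeByMethod) {
        double total = 0;
        double tls = 0;
        for (Map.Entry<String, Double> e : selfTimeByMethod.entrySet()) {
            total += e.getValue();
            if (TLS_PREFIXES.stream().anyMatch(e.getKey()::startsWith)) {
                tls += e.getValue();
            }
        }
        return total == 0 ? 0 : tls / total;
    }

    /** The rule of thumb from the text: over half the self time in TLS. */
    static boolean worthInvestigatingTermination(Map<String, Double> selfTimeByMethod) {
        return tlsFraction(selfTimeByMethod) > 0.5;
    }

    public static void main(String[] args) {
        // Illustrative self times in ms with made-up method names.
        Map<String, Double> sample = Map.of(
                "com.sun.crypto.provider.Example.encrypt", 600.0,
                "sun.security.ssl.Example.unwrap", 100.0,
                "com.example.echo.Example.copy", 300.0);
        System.out.println(tlsFraction(sample));
        System.out.println(worthInvestigatingTermination(sample));
    }
}
```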

Benchmarking

For benchmarking, I used wrk with a Lua script that sets the request body. The script below sets the body to a single letter:

wrk.method = "POST"
wrk.body = string.rep("a", 1)

I created scripts for payloads of 1 byte, 1 KB, 10 KB, 100 KB, and 1 MB.

Each script was executed like so:

wrk -c 40 -d 60s -t 8 -s wrk1.lua https://192.168.137.52:9443/
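Instead of writing the five Lua files by hand, a small generator can produce them along with the matching wrk command lines. This is a sketch that assumes 1 KB = 1024 bytes. The file names (wrk-1B.lua, wrk-1KB.lua, ...) and the class name are hypothetical, since only wrk1.lua appears above.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical generator for one wrk POST script per payload size. */
final class WrkScripts {

    // Payload label -> body size in bytes (assumes 1 KB = 1024 bytes).
    static final Map<String, Integer> PAYLOADS = new LinkedHashMap<>();
    static {
        PAYLOADS.put("1B", 1);
        PAYLOADS.put("1KB", 1024);
        PAYLOADS.put("10KB", 10 * 1024);
        PAYLOADS.put("100KB", 100 * 1024);
        PAYLOADS.put("1MB", 1024 * 1024);
    }

    /** Lua source that makes wrk POST a body of `size` copies of "a". */
    static String luaScript(int size) {
        return "wrk.method = \"POST\"\n"
                + "wrk.body = string.rep(\"a\", " + size + ")\n";
    }

    /** Same flags as the command above, parameterized by script and URL. */
    static String wrkCommand(String scriptFile, String url) {
        return "wrk -c 40 -d 60s -t 8 -s " + scriptFile + " " + url;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("wrk-scripts");
        for (Map.Entry<String, Integer> p : PAYLOADS.entrySet()) {
            Path script = dir.resolve("wrk-" + p.getKey() + ".lua");
            Files.write(script, luaScript(p.getValue()).getBytes(StandardCharsets.UTF_8));
            System.out.println(wrkCommand(script.toString(), "https://192.168.137.52:9443/"));
        }
    }
}
```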

HAProxy

This was my first experience with HAProxy, the TCP/HTTP load balancer. It might seem odd to put a load balancer in front of a single instance, but one of its features is TLS termination. By using HAProxy as a TLS terminator, the hope is that we get the best of both worlds: fast TLS and encrypted traffic between machines.

What attracted me to HAProxy is that we don't want to pay the cost of an HTTP proxy. HAProxy doesn't need to examine the contents at all; it just needs to decrypt them and forward them to a single server. It is unclear whether nginx, which I'm more familiar with, can avoid this overhead with its TCP load balancer.

After installing HAProxy, I added the following lines to the main config file, /etc/haproxy/haproxy.cfg:

frontend ft_myapp
    bind 192.168.137.52:9443 ssl crt /etc/ssl/private/jetty.key.pem
    mode tcp
    option tcplog
    default_backend bk_myapp

backend bk_myapp
    server srv localhost:8085

One thing that tripped me up is that the specified crt file must contain the private key unencrypted!
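HAProxy expects the certificate and its key together in the one PEM file given to crt. A quick text-level check can catch an encrypted key before it trips you up. The sketch below assumes standard PEM headers and uses a hypothetical class name. It only looks for headers and does not parse or validate the key itself.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;

/** Heuristic pre-flight check for a PEM file handed to HAProxy's crt option. */
final class HaproxyPemCheck {

    static boolean hasCertificate(String pem) {
        return pem.contains("-----BEGIN CERTIFICATE-----");
    }

    static boolean hasUnencryptedKey(String pem) {
        // PKCS#8 encrypted keys use a dedicated header.
        if (pem.contains("-----BEGIN ENCRYPTED PRIVATE KEY-----")) {
            return false;
        }
        // Traditional OpenSSL encrypted keys keep the RSA/EC header
        // but carry a "Proc-Type: 4,ENCRYPTED" line.
        if (pem.contains("Proc-Type: 4,ENCRYPTED")) {
            return false;
        }
        return pem.contains("-----BEGIN PRIVATE KEY-----")
                || pem.contains("-----BEGIN RSA PRIVATE KEY-----")
                || pem.contains("-----BEGIN EC PRIVATE KEY-----");
    }

    public static void main(String[] args) throws IOException {
        // Defaults to the path from the config above; pass another as an argument.
        String file = args.length > 0 ? args[0] : "/etc/ssl/private/jetty.key.pem";
        String pem = new String(Files.readAllBytes(Paths.get(file)), StandardCharsets.US_ASCII);
        System.out.println("certificate present:     " + hasCertificate(pem));
        System.out.println("unencrypted private key: " + hasUnencryptedKey(pem));
    }
}
```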

I did discover that TLS termination can be very CPU-intensive: HAProxy was fully saturating a single core in the benchmarks. It turns out that, by default, HAProxy only uses a single core. The workaround is to bump up nbproc like so:

global
    nbproc 2
    cpu-map 1 0
    cpu-map 2 1

HAProxy will now fully utilize two cores, but the documentation actually discourages this mode because it’s hard to debug. I was unable to find a better alternative, and others who have found TLS termination to be expensive use nbproc as well. I’m not too worried about the difficulty in debugging since HAProxy is just forwarding to a single local server.
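To run more than two processes, the global section follows a simple pattern: process i (counting from 1) is pinned to CPU i-1. The sketch below generates that section; the class name is hypothetical. Defaulting to Runtime.availableProcessors() is an assumption, and you may prefer to leave cores free for the JVM. Later HAProxy releases deprecated and then removed nbproc in favor of threads (nbthread), so check what your version supports.

```java
/** Hypothetical generator for the nbproc/cpu-map global section shown above. */
final class HaproxyNbproc {

    static String globalSection(int processes) {
        if (processes < 1) {
            throw new IllegalArgumentException("need at least one process");
        }
        StringBuilder sb = new StringBuilder("global\n");
        sb.append("    nbproc ").append(processes).append('\n');
        for (int proc = 1; proc <= processes; proc++) {
            // cpu-map <process number> <cpu id>: process 1 -> CPU 0, process 2 -> CPU 1, ...
            sb.append("    cpu-map ").append(proc).append(' ').append(proc - 1).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        int n = args.length > 0 ? Integer.parseInt(args[0])
                : Runtime.getRuntime().availableProcessors();
        System.out.print(globalSection(n));
    }
}
```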

The Future with Unix Domain Sockets

Unix domain sockets are interprocess communication endpoints addressed through the local filesystem, and they offer better performance than TCP connections over loopback. Jetty (the server embedded in Dropwizard) recently released a version that can accept incoming connections over a Unix socket. This feature was the original impetus for this post. If you're wondering why I haven't included the numbers here, it's because there are bugs that appear under load. Once they are hammered out, I'll post a follow-up article.

I did want to briefly mention how hard it was to test this setup. Testing with curl required curl 7.50, which is only found on the latest distros, so one will probably need to compile it from source.

curl --unix-socket /var/run/dropwizard.sock -dblah http://localhost/app/

curl needs permission to write to this socket.
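For comparison, newer JDKs (16+) can talk to Unix domain sockets directly through SocketChannel and UnixDomainSocketAddress, which was not an option when this post was written. The sketch below sends roughly the same request as the curl command. It assumes the server speaks HTTP/1.1 on the socket and honors Connection: close, so reading to end-of-stream yields the whole response. The class name is hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;

/** Hypothetical HTTP POST over a Unix domain socket (requires JDK 16+). */
final class UnixSocketPost {

    static String post(Path socket, String path, String body) throws IOException {
        byte[] payload = body.getBytes(StandardCharsets.UTF_8);
        String head = "POST " + path + " HTTP/1.1\r\n"
                + "Host: localhost\r\n"  // matches the host in http://localhost/app/
                + "Content-Type: application/x-www-form-urlencoded\r\n"  // curl's default for -d
                + "Content-Length: " + payload.length + "\r\n"
                + "Connection: close\r\n\r\n";
        try (SocketChannel ch = SocketChannel.open(StandardProtocolFamily.UNIX)) {
            ch.connect(UnixDomainSocketAddress.of(socket));
            writeFully(ch, head.getBytes(StandardCharsets.US_ASCII));
            writeFully(ch, payload);
            // Read until the server closes the connection.
            ByteArrayOutputStream response = new ByteArrayOutputStream();
            ByteBuffer buf = ByteBuffer.allocate(8192);
            while (ch.read(buf) != -1) {
                buf.flip();
                response.write(buf.array(), 0, buf.limit());
                buf.clear();
            }
            return response.toString(StandardCharsets.UTF_8);
        }
    }

    private static void writeFully(SocketChannel ch, byte[] bytes) throws IOException {
        ByteBuffer buf = ByteBuffer.wrap(bytes);
        while (buf.hasRemaining()) {
            ch.write(buf);
        }
    }

    public static void main(String[] args) throws IOException {
        // Roughly: curl --unix-socket /var/run/dropwizard.sock -dblah http://localhost/app/
        System.out.println(post(Path.of("/var/run/dropwizard.sock"), "/app/", "blah"));
    }
}
```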

HAProxy can send data through Unix sockets, but information on this is sparse online. The only prominent link I found was “Can haproxy be used to balance unix sockets”, which gives an idea of how it should be done. I tried for several hours to get it working, and it wasn't until I found an obscure mailing list thread (which, unfortunately, I have forgotten) that I made some progress. The key seemed to be using unix-bind:

global
    unix-bind prefix /tmp mode 770 group haproxy

backend bk_myapp
    server srv3 [email protected]

Then place dropwizard.sock in /var/lib/haproxy so that HAProxy can read from and write to it.

Conclusion

SSL/TLS can be expensive with non-optimized implementations (especially Java's). In cases like these, it may be beneficial to offload TLS termination to a local proxy. However, since this increases the complexity of the system, measure server performance first to confirm that SSL is actually causing the slowdown. There is room for further improvement with Unix sockets once the surrounding libraries have stabilized.
