My Bet on Rust has been Vindicated


As a side project, I’m writing a Europa Universalis IV (EU4) leaderboard and in-browser save file analyzer called Rakaly. You don’t need to be familiar with the game or the app, but feel free to check Rakaly out if you want; we’re just getting started.

I’m writing this post because whenever Rust is posted on the internet, it tends to elicit polar opposite responses from two camps: the evangelists and the disillusioned. Both can be correct in their viewpoints, but when one is neck deep in a Rust side project and stumbles across a discussion that is particularly critical of Rust, self-doubt may creep in. And while self-doubt isn’t inherently bad (second guessing can be beneficial), too much doubt can cause one to disengage from their hobby. This post is me sharing the struggles I hit along the way and realizing that the wins Rust gave me far outweigh them.

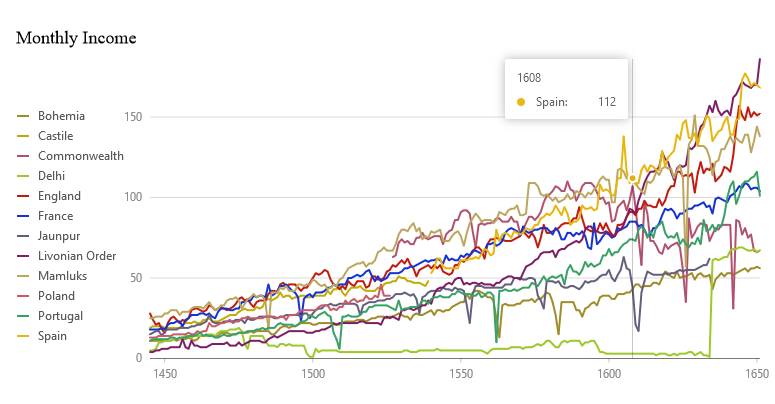

One of the visualizations created by Rakaly based on game data

It may seem surprising that someone who has been programming Rust as a hobby for 4 years would feel the need for vindication now, but this project was my first user facing app with over 15k lines of Rust, and while I’ve been programming Rust for a “long” time, I would only consider myself proficient and not an expert by any means.

Also for those who need to hear it: any side project that you’re proud of is a success.

As one might imagine from the title of this post, Rakaly is mainly written in Rust. This single Rust code base serves several use cases:

- The server side app which receives an EU4 game save file, runs diagnostics, and stores the save.

- The wasm bundle which runs the same diagnostics client side.

- A shared library that I can give to third party developers so they can integrate the functionality.

In total: a backend server, client side wasm, and a shared library all are derived from the same code base. I can’t imagine doing this in any other language.

Why the focus on client side

Before diving into Rust specifics, I want to take a moment to share my philosophy that it’s important to offer a service that requires a minimal amount of friction for visitors and myself. A solution that can run self contained on the client is perfect. This is where Rust compiled to WASM (accessed through web workers) is critical.

Visitors can jump right in with just their browser without needing an account. No need to download a potentially sketchy executable or give credentials. They just drag and drop a file and all the processing is done inside the browser. Spotty internet, bandwidth limitations, or a slow or down server don’t inhibit the visitor’s experience.

For me a client side solution means that the server isn’t critical. A spike in visitors doesn’t necessarily mean a load spike on the server. If no cross user state needs persistence, one may not even need a backend. That’s the route I took with the Rocket League replay parser I wrote (can be seen hosted here and there’s even a community written Python wrapper – I seem to like to program game tools more than play them). With a leaderboard though, backend state is necessary for Rakaly. Though I take comfort in knowing that if I ever want to sunset the app whether for cost or time savings, the users will still be able to use the app, as all the static assets are stored in Cloudflare (and the use of Cloudflare workers and B2 will allow even uploaded game save files to remain useable).

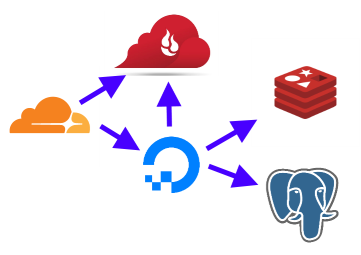

For those curious about the architecture:

- Cloudflare Workers (static assets + fetch S3 files)

- Backblaze B2 (S3 compatible storage)

- DigitalOcean (VPS)

- Postgres (relational data)

- Redis (leaderboard, session storage)

Cloudflare ❤️ Backblaze ❤️ DigitalOcean ❤️ Postgres ❤️ Redis

Enough architecture, more Rust.

Wins

Sharing. The headlining win is the shared core. Simplifying things a bit, there are essentially 4 crates in this project:

- The parser which sets the foundation for all the other crates

- The server app written with warp

- A C compatible dynamic library with a nice header file created through cbindgen. Other parties have asked for Rakaly functionality (specifically in a C++ wx app) and this fits the bill perfectly.

- The wasm interface that is translated by wasm-bindgen

Then there is the React frontend that communicates with the wasm through a web worker (can’t block the UI thread!).

Additional use cases can be added with ease. I’m thinking about one day adding a native app (different use case than web) and there’ll be an incredible amount of code reuse.

All this sharing allows one person development teams to be anywhere and everywhere at once. Nothing is off limits.
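
To make the shared-core idea concrete, here is a minimal sketch of how one piece of core logic can sit behind a C-compatible entry point. It is not Rakaly’s actual API: the function names, the result codes, and the `EU4txt` / `EU4bin` prefix check are all assumptions made for illustration. The server, the wasm interface, and the C library would each be a thin wrapper like `sketch_detect_save_kind` around the same plain Rust function.

```rust
use std::slice;

/// Result codes returned across the C boundary (hypothetical values).
pub const SAVE_KIND_TEXT: i32 = 0;
pub const SAVE_KIND_BINARY: i32 = 1;
pub const SAVE_KIND_UNKNOWN: i32 = -1;

/// Shared core logic: plain Rust that every front end can call.
/// The "EU4txt" / "EU4bin" prefixes are assumed here for illustration.
pub fn detect_save_kind(data: &[u8]) -> i32 {
    if data.starts_with(b"EU4txt") {
        SAVE_KIND_TEXT
    } else if data.starts_with(b"EU4bin") {
        SAVE_KIND_BINARY
    } else {
        SAVE_KIND_UNKNOWN
    }
}

/// C-compatible wrapper around the shared core.
///
/// In a real `cdylib` crate this function would also carry the
/// `no_mangle` attribute so that the symbol keeps its name for C and
/// C++ callers and so that cbindgen emits a declaration for it.
///
/// # Safety
/// `data` must be null or point to `len` readable bytes.
pub unsafe extern "C" fn sketch_detect_save_kind(data: *const u8, len: usize) -> i32 {
    if data.is_null() {
        return SAVE_KIND_UNKNOWN;
    }
    // SAFETY: the caller guarantees `data` points to `len` readable bytes.
    let bytes = unsafe { slice::from_raw_parts(data, len) };
    detect_save_kind(bytes)
}
```

A C or C++ caller would see the wrapper as an ordinary function taking a pointer and a length, while the server and wasm crates can call `detect_save_kind` directly.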

Serde. I’m able to write regular structs that happen to hold deserialized data. It’s important to not have additional ceremony in parsing the many fields as there are over 5000 unique fields that occur in a save. Having to write any additional code to support a field would be overwhelming.

To give an example, below is the only struct needed to deserialize and work with the version that the game save file declares itself to be. Notice that it has the logic to deal with a misspelling in the data, but the code sees the correct spelling.

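    use serde::{Deserialize, Serialize};

    #[derive(Debug, Clone, Deserialize, Serialize, PartialEq)]
    pub struct SavegameVersion {
        pub first: u16,
        pub second: u16,
        pub third: u16,

        // The save data misspells this key as "forth"; map it onto the
        // correctly spelled field when deserializing.
        #[serde(rename(deserialize = "forth"))]
        pub fourth: u16,
        pub name: String,
    }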

That’s it. That’s 5 fields covered, only 4,995 more to go.

An additional benefit of serde is that it is parser agnostic. Even though save files can come in two flavors, JSON-like and binary (where keys are identified by a 16 bit lookup table), both formats will be deserialized into the same struct. I shudder at the thought of where I would be in this project if I needed to duplicate every field, one for plaintext and another for binary. Serde is a huge productivity gain.
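
As a rough illustration of why one struct can serve both formats, the sketch below resolves a key from either flavor to the same field name before it reaches the deserializer. The `RawKey` and `TokenTable` types and the token values are hypothetical; the real lookup table is not shown in this post.

```rust
use std::collections::HashMap;

/// A key as it appears in either save flavor.
pub enum RawKey<'a> {
    /// Plaintext saves spell the field name out.
    Text(&'a str),
    /// Binary saves store a 16-bit token instead of the name.
    Token(u16),
}

/// Resolves binary tokens to the names the plaintext format uses.
pub struct TokenTable {
    names: HashMap<u16, &'static str>,
}

impl TokenTable {
    /// Builds a table from (token, name) pairs. Any pairs passed in this
    /// sketch are invented; the real token values are not in this post.
    pub fn new(pairs: &[(u16, &'static str)]) -> Self {
        TokenTable {
            names: pairs.iter().cloned().collect(),
        }
    }

    /// Returns the field name for a key from either format, or `None`
    /// when a binary token is missing from the table.
    pub fn resolve<'a>(&self, key: RawKey<'a>) -> Option<&'a str> {
        match key {
            RawKey::Text(name) => Some(name),
            RawKey::Token(id) => self.names.get(&id).copied(),
        }
    }
}
```

Once both formats produce the same names, a single set of struct definitions is enough for either one.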

Speed. Save files are zip files that when inflated can reach in excess of 100MB. Parsing these files quickly is of the utmost importance. The same parsing code is executed on the client and server side and so must be fast for both. With Rust, files are parsed and deserialized at over 500 MB/s on the server side and around 100 MB/s through client side wasm. Could other languages be as fast or faster? Sure, especially if they are written in the style of simdjson, but out of the parsers I’ve seen or written myself in years past, none can hold a candle to the performance of Rust.
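
To put those numbers in perspective, here is a small sketch of the arithmetic, plus a helper for timing a parse function. It assumes decimal megabytes (1 MB = 1,000,000 bytes), and the function names are made up for this example.

```rust
use std::time::{Duration, Instant};

/// Bytes per megabyte as used in this sketch (decimal MB, an assumption).
const BYTES_PER_MB: f64 = 1_000_000.0;

/// Throughput in MB/s for `bytes` processed in `elapsed`.
pub fn mb_per_sec(bytes: usize, elapsed: Duration) -> f64 {
    (bytes as f64 / BYTES_PER_MB) / elapsed.as_secs_f64()
}

/// Time needed to process `bytes` at `rate` MB/s.
pub fn time_at(bytes: usize, rate: f64) -> Duration {
    Duration::from_secs_f64(bytes as f64 / BYTES_PER_MB / rate)
}

/// Runs `parse` once over `data` and reports its throughput in MB/s.
pub fn measure<F: FnOnce(&[u8])>(data: &[u8], parse: F) -> f64 {
    let start = Instant::now();
    parse(data);
    mb_per_sec(data.len(), start.elapsed())
}
```

At the rates quoted above, a 100 MB save takes about 0.2 seconds to parse on the server and about 1 second in the browser.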

Tools. The tools that Rust and the Rust community provide are invaluable:

- Criterion: allows me to benchmark with confidence, so I can quantify performance differences in the changes I’m making (I’ve written about Rust benchmarking before)

- Cargo fuzz: gives me the confidence that I can accept untrusted user input without nefarious side effects.

- Cross: cross compiling a musl build for either aarch64 or x86 allows me to copy the executable anywhere and it’ll just work, or I can easily use it in distroless Docker containers

- Rustfmt: I don’t have to spend cycles thinking how something is formatted

- Clippy: catches silly mistakes

- And more: shoutout to cargo asm, cargo bloat, cargo outdated, and cargo release

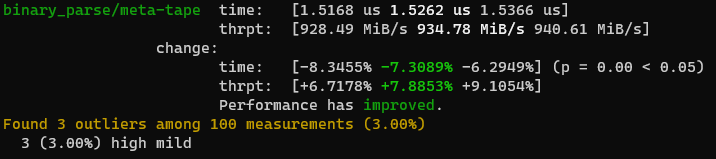

Below is a screenshot from a criterion run showing that the core parsing logic for the binary format is almost reaching 1000 MB/s.

Criterion benchmarking parsing logic

Struggles

With Rust wins come Rust struggles. Some will be new struggles that may not have been discussed yet, while others are well known, but it’s important to list them all as they all have a share in casting doubt.

Serde. Serde is both a win and struggle. The underlying format of the game save files is proprietary and undocumented – it’s JSON-like but definitely not JSON. Here’s a snippet.

    {
        "core": "core1",
        "nums": [1, 2, 3, 4, 5],
        "core": "core2"
    }

Notice the core field occurs multiple times and the occurrences don’t follow one another. The end result is that data should be deserialized into:

    struct MyDocument {
        core: Vec<String>,
        nums: Vec<u8>,
    }

Since serde doesn’t support aggregating multiple occurrences of a field, I needed to buffer the whole document in memory and provide a small translation layer where I’d gather similarly named fields in one place for serde. I wrote a small segment about this inside another post with the intimidating title: Parsing Performance Improvement with Tapes and Spatial Locality. While ideally I’d like to parse the document iteratively, it hasn’t been a blocker on either the client or server side (probably because people are already used to browsers being memory hogs).
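
The translation layer amounts to grouping values by key before handing them to serde. The sketch below shows the idea on already-tokenized key/value pairs. It treats every value as an opaque string and uses a hypothetical `gather_fields` function, so it illustrates the approach rather than the actual implementation.

```rust
use std::collections::HashMap;

/// Groups values by key, preserving the order in which keys first appear.
/// The whole document has to be in memory, since a repeated key can show
/// up anywhere after its first occurrence.
pub fn gather_fields<'a>(pairs: &[(&'a str, &'a str)]) -> Vec<(&'a str, Vec<&'a str>)> {
    // Maps each key to its position in `grouped`.
    let mut index: HashMap<&'a str, usize> = HashMap::new();
    let mut grouped: Vec<(&'a str, Vec<&'a str>)> = Vec::new();

    for &(key, value) in pairs {
        let slot = index.get(key).copied();
        match slot {
            // Seen before: append to the existing group.
            Some(position) => grouped[position].1.push(value),
            // First occurrence: start a new group.
            None => {
                index.insert(key, grouped.len());
                grouped.push((key, vec![value]));
            }
        }
    }
    grouped
}
```

Feeding it the snippet above as `[("core", "core1"), ("nums", "[1, 2, 3, 4, 5]"), ("core", "core2")]` yields one `core` entry holding both values, followed by one `nums` entry.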

2020-08-12 Update: a custom derive macro was created that will aggregate fields marked with #[jomini(duplicated)]. And while it would have been preferable to have this natively in serde, there ended up being a big enough performance improvement around edge cases that made this macro worth the time to maintain.

Compile times. Everyone already expected this, so I’ll keep it short. There are about 15k lines of Rust total and building the server crate on my 8 core machine takes 9 minutes for a clean build and 6 minutes for incremental. It’s not great, but I haven’t found it intolerable yet. For coping mechanisms: write more code between rebuilds; move code into a lower level crate (compiling a lower level crate shaves compile times down to a hair under a minute, though there’s only so much that can be done when using crates like serde, which seems full of pros and cons); or try to be productive during compiles and watch (Rust) YouTube videos. On a lower specced machine, compile times do verge on intolerable: my mid-range laptop (about 7 years old) takes a bit over 25 minutes for a clean compile and occasionally becomes unresponsive while doing so. I’ve been thinking about buying a new laptop.

Test execution times. This may or may not come as a surprise but when working with files that can reach 100MB (and this 100MB can’t really be whittled down), tests can be slow. I previously lauded Rust for how performant the code can be, but in debug mode the opposite is true. I don’t think I’ve seen a language with such a performance gap between debug and production mode. I’ll leave a snippet of my Cargo.toml to explain things.

    # We override the test profile so that our tests run in a tolerable time as
    # some of the asset files are heavyweight and can take a significant amount of
    # time. Here is some timing data recorded to run one test:
    #
    # cargo test                 0m15.037s
    # cargo test (opt-level=3)   0m9.644s
    # cargo test (+lto=thin)     0m0.907s
    # cargo test --release       0m0.620s
    [profile.test]
    opt-level = 3
    lto = "thin"

So that’s 15s vs 0.6s to run one test, an incredible difference. The test ran about 24x faster in release mode, which may not make a big difference with minuscule tests (24x a tiny number can also be tiny), but these tests are anything but small. So the fix is essentially to configure tests to be optimized before running. As can be imagined, this has a detrimental effect on test compilation, but at least I’m not waiting a half hour for the test suite to finish.
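
For anyone who wants to check the ratios, this sketch parses timings written in the `0m15.037s` style from the Cargo.toml comment and computes the speedups. The helper names are hypothetical.

```rust
/// Parses a duration in the `0m15.037s` style into seconds.
pub fn parse_timing(text: &str) -> Option<f64> {
    let without_unit = text.trim().strip_suffix('s')?;
    let mut parts = without_unit.splitn(2, 'm');
    let minutes: f64 = parts.next()?.parse().ok()?;
    let seconds: f64 = parts.next()?.parse().ok()?;
    Some(minutes * 60.0 + seconds)
}

/// How many times faster `optimized` is than `baseline`.
/// Returns `None` if either timing fails to parse.
pub fn speedup(baseline: &str, optimized: &str) -> Option<f64> {
    Some(parse_timing(baseline)? / parse_timing(optimized)?)
}
```

With the timings above, `--release` comes out a little over 24x faster than the default test profile, and the `opt-level = 3` plus thin LTO override comes out roughly 16.6x faster.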

Conclusion

The moment of vindication came after I announced Rakaly to the world. 3rd party developers saw it and wanted some of the functionality in their C++ app. I hadn’t considered this use case. If I had written Rakaly in another language I might be hard pressed to meet this use case and create a shared library for them to integrate. Thankfully, it wasn’t hard and I was able to produce a shared library for them.

I do want to quickly mention that Go could satisfy every use case as well (I recently learned one can create shared libraries in Go), but it is discouraging that the official Go wiki states that the created wasm bundle will be a minimum of 2MB. TinyGo is listed as a solution, though my preference is still Rust as it has great official support for wasm.

So yes, it should go without saying that Rakaly could have been implemented in other languages (e.g., C, C++, Go), but Rust was able to deliver the project quickly, safely, and without too many compromises. This is why I like Rust and will continue to use it, as it can be adapted to whatever I need next.

Comments

If you'd like to leave a comment, please email [email protected]

I like the perspective of client-side wasm as a new way to develop in-browser apps that today need server backends because JavaScript is poor at heavy in-browser processing. These wasm apps are web apps that don’t need to make API calls to a backend for processing. Powerful indeed, as many existing web apps can be written this way.

See for example https://perspective.finos.org/ with mind-blowing performance, but it is C++ compiled into WASM.

I’ve learned a lot while reading this :)

Is this project open source? I’d love to see how everything is set up.

Interesting overview. And some interesting things in the list of tools

Yes, this project is open sourced: https://github.com/rakaly