Leveraging Rust to Bundle Node Native Modules and Wasm into an Isomorphic NPM Package


I recently published highwayhasher as an npm package. Highwayhasher exposes Google’s HighwayHash to the JS world through a pure Rust reimplementation. There will be a lot to unpack, so here’s a rundown of the library’s features that we’ll cover:

- ✔ Isomorphic: Run on node and in the browser with the same API

- ✔ Fast: Generate hashes at over 700 MB/s when running in WebAssembly

- ✔ Faster: Generate hashes at over 8 GB/s when running on native hardware

- ✔ Self-contained: Zero runtime dependencies

- ✔ Accessible: Prebuilt native modules mean no build dependencies

- ✔ Small: 10kB when minified and gzipped for browsers

- ✔ Runs the same test suite in node and browser environments

- ✔ Minimal dev dependencies

To put the API features concretely, given the following snippet:

const { HighwayHash } = require("highwayhasher");

(async function () {
  const keyData = Uint8Array.from(new Array(32).fill(1));
  const hash = await HighwayHash.load(keyData);
  hash.append(Uint8Array.from([0]));
  console.log(hash.finalize64());
})();

- Browsers will use the Wasm implementation (10kB)

- Windows, Mac, and Linux environments will call into their respective pre-compiled node native module

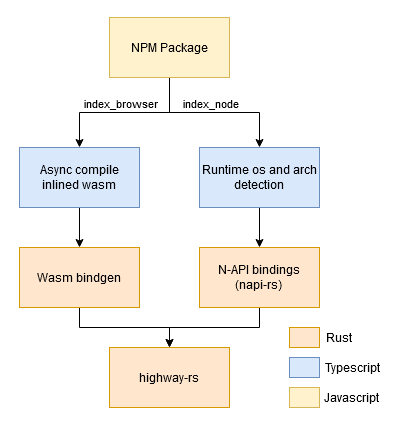

Here is how highwayhasher is structured at a high level, which you can refer back to:

Architecture of highwayhasher

Why Wasm and Native Modules

I’m a huge fan of WebAssembly (Wasm). I find it immensely powerful to take Rust code, compile it to Wasm, and ship the exact same JS + Wasm to both web and server-side users. But Wasm is not a panacea. Two major shortcomings are apparent:

- Lack of access to platform-dependent APIs. My friends over at Ditto, for example, tap into platform-specific APIs like Core Bluetooth. (I have Ditto to thank for piquing my interest in isomorphic NPM packages grounded in Rust, and we worked together to develop some of the techniques you see here.)

- Lack of access to hardware intrinsics (e.g., SIMD instructions)

The good news is that these problems are diligently being worked on with the WebAssembly System Interface (WASI) and an official SIMD proposal for Wasm.

Until these solutions have matured we’ll need to tie directly into Node.

Bundling

At the top of the architecture stack, the NPM package has four entry points:

- index_node (es module)

- index_node (cjs module)

- index_browser (es module)

- index_browser (cjs module)

This is accomplished by the following slice from package.json:

{
  "main": "./dist/node/cjs/index_node.js",
  "module": "./dist/node/es/index_node.js",
  "browser": {
    "./dist/node/cjs/index_node.js": "./dist/browser/cjs/index_browser.js",
    "./dist/node/es/index_node.js": "./dist/browser/es/index_browser.js"
  }
}

The above package.json informs downstream consumers:

- If you’re using highwayhasher in a Node.js environment, call into the "main" entry point.

- If you’re using highwayhasher with tooling that understands ES modules, call into the "module" entry point. (Node itself ignores this field; it is a convention honored by bundlers such as webpack and rollup.)

- If you’re using highwayhasher in a browser environment (i.e., highwayhasher is referenced in an app that is being bundled by webpack, rollup, or another tool that respects the "browser" package.json spec), replace the call into the node module with the browser’s ES module (most likely) or the browser’s CJS module (least likely).

This is the same technique that most isomorphic packages (packages that expose the same API to node and the browser) rely on.
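To see how the three fields interact, here is a simplified model of how a bundler might pick an entry point. It is a sketch, not how webpack or rollup are actually implemented: real resolvers handle many more cases, and resolveEntry, PackageJson, and Target are hypothetical names. It only covers the "main"/"module" choice and the file-to-file replacement map in the "browser" field.

```typescript
// Simplified model of entry-point selection from package.json (sketch only)
interface PackageJson {
  main: string;
  module?: string;
  browser?: Record<string, string>;
}

type Target = "node" | "browser";

function resolveEntry(pkg: PackageJson, target: Target, understandsEsm: boolean): string {
  // ESM-aware tools prefer "module"; Node.js itself only reads "main" here
  const entry = understandsEsm && pkg.module !== undefined ? pkg.module : pkg.main;
  // When bundling for the browser, swap the node entry for its browser counterpart
  const replacement = pkg.browser?.[entry];
  if (target === "browser" && replacement !== undefined) {
    return replacement;
  }
  return entry;
}

// The slice of highwayhasher's package.json shown above
const highwayPkg: PackageJson = {
  main: "./dist/node/cjs/index_node.js",
  module: "./dist/node/es/index_node.js",
  browser: {
    "./dist/node/cjs/index_node.js": "./dist/browser/cjs/index_browser.js",
    "./dist/node/es/index_node.js": "./dist/browser/es/index_browser.js",
  },
};
```

The browser map is keyed by the node entry paths, which is why both the CJS and ES node entries need their own browser replacement.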

The contents of index_browser.ts and index_node.ts can be shockingly short as their only purpose is to set up exports. Below we see index_node.ts which aliases the NativeHighwayHash class to HighwayHash so that consumers can remain functionally unaware of the underlying type.

export { NativeHighwayHash as HighwayHash } from "./native";

export type { IHash } from "./model";

The index_browser.ts is exactly the same except that WasmHighwayHash is aliased to HighwayHash.

It’s important for these aliases to implement the same interface:

export class NativeHighwayHash {
  static async load(key: Uint8Array | null | undefined): Promise<IHash> {
    return new NativeHash(key);
  }
}

It may seem odd to stick a synchronous function behind async and have it return an IHash instead of the concrete type, but this is all done so that WasmHighwayHash and NativeHighwayHash operate exactly the same for the user.
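To make the parity argument concrete, here is a minimal, self-contained sketch. It does not use highwayhasher's real bindings: a 32-bit FNV-1a hash stands in for HighwayHash, and IToyHash, NativeLikeHash, WasmLikeHash, and digest are hypothetical names. The only point is that a synchronous constructor hidden behind an async load() lets callers treat both flavors identically.

```typescript
// Hypothetical, simplified interface that both flavors expose
interface IToyHash {
  append(data: Uint8Array): void;
  finalize32(): number;
}

// Stand-in so the sketch runs on its own: 32-bit FNV-1a, NOT HighwayHash.
// The key is just fed in first, which is not a real keyed construction.
class Fnv1aHash implements IToyHash {
  private state = 0x811c9dc5; // FNV-1a 32-bit offset basis
  constructor(key: Uint8Array) {
    this.append(key);
  }
  append(data: Uint8Array): void {
    for (let i = 0; i < data.length; i++) {
      // XOR the byte in, then multiply by the FNV prime modulo 2^32
      this.state = Math.imul(this.state ^ data[i], 0x01000193) >>> 0;
    }
  }
  finalize32(): number {
    return this.state;
  }
}

// "Native" flavor: construction is synchronous; async exists only for parity
class NativeLikeHash {
  static async load(key: Uint8Array): Promise<IToyHash> {
    return new Fnv1aHash(key);
  }
}

// "Wasm" flavor: must await a one-time compilation before constructing.
// Promise.resolve() is a placeholder for the real Wasm compile step.
let compilation: Promise<void> | undefined;
class WasmLikeHash {
  static async load(key: Uint8Array): Promise<IToyHash> {
    if (compilation === undefined) {
      compilation = Promise.resolve();
    }
    await compilation;
    return new Fnv1aHash(key);
  }
}

// Callers write the same code regardless of which class HighwayHash aliases
async function digest(
  impl: { load(key: Uint8Array): Promise<IToyHash> },
  data: Uint8Array,
): Promise<number> {
  const hash = await impl.load(new Uint8Array());
  hash.append(data);
  return hash.finalize32();
}
```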

The async is necessary so that the Wasm gets a chance to compile. As I’ve written before, I always inline Wasm in browser libraries so that it doesn’t require a fetch at runtime, which nearly turns the use of Wasm into an implementation detail. I leave the Wasm as a separate file for node users so they reap whatever measly size and speed benefits come from not needing to base64 decode data (base64 is how Wasm is encoded when inlined). I stress "nearly" because Wasm forced us to color the load function with async, which comes with an ergonomic cost on node, where top-level await support is not widespread. This means that, in theory, the entirely synchronous native implementation could be published to allow code like:
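Here is a minimal sketch of inlining Wasm as base64 and compiling it asynchronously. It assumes a runtime that provides atob and WebAssembly (modern browsers and Node.js 16+). The embedded bytes are only the smallest valid Wasm module (the "\0asm" magic number plus version 1), not highwayhasher's real binary. The wasm-bindgen generated glue that highwayhasher actually uses is not shown, and INLINED_WASM_BASE64, decodeBase64, and loadInlinedWasm are hypothetical names.

```typescript
// Base64 of the smallest valid Wasm module: "\0asm" magic + version 1.
// A real build would embed the compiled library here instead.
const INLINED_WASM_BASE64 = "AGFzbQEAAAA=";

// Decode base64 into raw bytes (atob yields a "binary string")
function decodeBase64(b64: string) {
  const binary = atob(b64);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return bytes;
}

// Compilation is asynchronous, which is what forces load() to be async.
// The promise is memoized so repeated loads compile only once.
let modulePromise: Promise<WebAssembly.Module> | undefined;
function loadInlinedWasm(): Promise<WebAssembly.Module> {
  if (modulePromise === undefined) {
    modulePromise = WebAssembly.compile(decodeBase64(INLINED_WASM_BASE64));
  }
  return modulePromise;
}
```

The base64 step is the price of inlining. It costs some bundle size (base64 is about a third larger than raw bytes), but it removes the runtime fetch.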

const { HighwayHash } = require("highwayhasher");
const keyData = Uint8Array.from(new Array(32).fill(1));
const hash = new HighwayHash(keyData);
const out = hash.finalize64();

But since our goal is an isomorphic API, we have to make ergonomic sacrifices and force all users to load modules asynchronously:

const { HighwayHash } = require("highwayhasher");

(async function () {
  const keyData = Uint8Array.from(new Array(32).fill(1));
  const hash = await HighwayHash.load(keyData);
  hash.append(Uint8Array.from([0]));
  const out = hash.finalize64();
})();

This cost can be partially mitigated when loading a module needs to be asynchronous but construction can be synchronous. In the case of highwayhasher, constructing a hash from key data is synchronous for both node and browsers. Splitting these steps makes it more apparent that hashing data is done synchronously (the following example is contrived but makes more sense when embedded in a larger application):

import { HighwayHash } from "highwayhasher";

function hashData64(data, hash, key) {
  const hasher = hash.create(key);
  hasher.append(data);
  return hasher.finalize64();
}

(async function () {
  const keyData = Uint8Array.from(new Array(32).fill(1));
  const hash = await HighwayHash.loadModule();
  hashData64(Uint8Array.from([0]), hash, keyData);
})();

At this point, I believe we’ve reached a local maximum of ergonomics given the constraints of the underlying APIs.

In addition to massaging function signatures to be equivalent between implementations, the TypeScript wrappers around the underlying Rust bindings also take care of some of the validation and errors, as these tend to be more succinctly expressed in TypeScript than in Rust bindings. For instance, it is easier to write Rust bindings that assume hash initialization takes a non-null byte array of length 0 (to trigger the default key) or 32. Then the wrappers can guard against the other possibilities:

class WasmHash extends WasmHighway implements IHash {
  constructor(key: Uint8Array | null | undefined) {
    // A provided key must be exactly 32 bytes
    if (key && key.length !== 32) {
      throw new Error("expected the key buffer to be 32 bytes long");
    }
    // An empty array tells the Rust bindings to use the default key
    super(key || new Uint8Array());
  }
}
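If NativeHash and WasmHash both need this guard, one option is to pull it into a shared helper so the two implementations cannot drift apart. The sketch below uses hypothetical names (normalizeKey and KEY_LENGTH are not highwayhasher's API). It returns exactly what the Rust bindings are described as expecting: an empty array to select the default key, or a 32-byte key.

```typescript
const KEY_LENGTH = 32;

// Hypothetical shared guard: null/undefined select the default key,
// anything else must be exactly KEY_LENGTH bytes
function normalizeKey(key: Uint8Array | null | undefined): Uint8Array {
  if (key === null || key === undefined) {
    return new Uint8Array(); // empty => bindings fall back to the default key
  }
  if (key.length !== KEY_LENGTH) {
    throw new Error(`expected the key buffer to be ${KEY_LENGTH} bytes long`);
  }
  return key;
}
```

Each wrapper constructor could then call super(normalizeKey(key)) (or the native equivalent), keeping the error message identical across implementations.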

Errors that do originate from the Rust bindings should be uniform in structure between the native and Wasm implementations to simplify how the wrapper code propagates them to the user.

Wasm

There’s not too much novelty on the Wasm implementation side: it’s a pretty cut-and-dried wasm-bindgen implementation.

I have a bit of an opinionated style for Wasm libraries, which is highlighted in a different article, but it essentially boils down to:

- Prefer invoking wasm-pack build -t web instead of relying on additional middleman dependencies for bundlers (and if wasm-pack is sunset, I’ll move back to wasm-bindgen-cli)

- Replace the import.meta.url found in the generated output so that webpack < 5 can digest the module

- Inline the Wasm for browsers

That’s it, minimal magic.

The default allocator is replaced with wee_alloc, which reduced the raw Wasm file size from 21 kB to 15 kB. Since the hash implementation doesn’t make heap allocations, the performance of the allocator is nearly irrelevant. I even tried creating a null allocator to see if it was possible to omit one, but function arguments are allocated, so that attempt died quickly. For the curious, the null allocator reduced the Wasm file size from 15 kB to 12 kB.

N-API

In order to instruct Node to call into our rust library, we must create an addon. These addons are typically written in C/C++ and are dynamically linked. From the nodejs docs:

There are three options for implementing addons: N-API, NAN (Native Abstractions for Node.js), or direct use of internal V8, libuv and Node.js libraries. Unless there is a need for direct access to functionality which is not exposed by N-API, use N-API.

Since node recommends N-API, the next step is taking advantage of N-API from Rust. There are three Rust libraries for writing N-API bindings:

- neon via the napi-runtime feature

- napi-rs

- node-bindgen

After testing all three, I ended up settling on napi-rs due to its minimalistic approach. Neon is in a transition stage and node-bindgen had some growing pains that affected app behavior, so I figured the lowest level of the three (napi-rs) is the safest bet and serves as a learning experience for the N-API platform. I’m free to change my mind in the future, as which library is used to bind to N-API should just be an implementation detail.

I want to take a moment and extol these rust N-API projects. The projects are actively developed and the maintainers are quick to respond to questions / bug reports and are courteous. I tend to hold rust projects to a higher standard and these projects were still able to stand above the rest. Despite my decision to go with napi-rs for highwayhasher, I think any of these crates are viable for an N-API project: neon for the popularity, napi-rs for the minimalism, or node-bindgen for the ergonomics.

Runtime Detection

Napi-rs contains a CLI component, napi, to help build the distributable N-API library:

napi build -c --platform --release

On my machine, the above will spit out a file called:

highwayhasher.linux-x64-gnu.node

So the built library will only work on 64-bit (x64) Linux with glibc.

It’s quite easy to import the library like any other:

const nativeModule = require("./highwayhasher.linux-x64-gnu.node");

The issue is that this require is platform specific, so in order to compute the correct require request there is a @node-rs/helper package. Or if you’re like me and don’t think a hashing library should have any dependencies (as a user, it is incredibly frustrating when a deeply nested dependency just starts breaking), one can approximate it with:

import { platform, arch } from "os";

// Map an os.platform() value to the ABI suffix used in the file name
const getAbi = (platform: string): string => {
  switch (platform) {
    case "linux":
      return "-gnu";
    case "win32":
      return "-msvc";
    default:
      return "";
  }
};

const MODULE_NAME = "highwayhasher";
const PLATFORM = platform();
const ABI = getAbi(PLATFORM);

// e.g. ../highwayhasher.linux-x64-gnu.node
const nativeModule = require(`../${MODULE_NAME}.${PLATFORM}-${arch()}${ABI}.node`);

Interestingly the underlying bug in the issue I linked above was due to a misuse of N-API, and while I would never voice this out loud and be accused of unnecessary rust evangelism, I’m curious if rust N-API bindings could have prevented this class of bugs.

One may be alarmed that we’re importing functions from the node specific os module in a package designed for browser use, but we’re safe as long as the above snippet is never referenced from the root of index_browser. This is why we define multiple entry points for our package.

I mark all node imports (in highwayhasher’s case, it’s just os) as external in the rollup config to let rollup know to not resolve it (and I wasn’t about to add a dev dependency for just this functionality).
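As a rough sketch, a Rollup config written in TypeScript that marks os as external might look like the following. The input path is a hypothetical placeholder (it assumes the TypeScript sources were compiled to plain JS first), and the output paths simply mirror the package.json entries shown earlier. Depending on the Rollup version, loading a .ts config may require its --configPlugin option.

```typescript
// rollup.config.ts (sketch): leave the Node built-in "os" unresolved
import type { RollupOptions } from "rollup";

const config: RollupOptions = {
  input: "build/index_node.js", // hypothetical: output of a prior tsc step
  external: ["os"], // Rollup keeps imports of "os" as-is instead of bundling
  output: [
    { file: "dist/node/cjs/index_node.js", format: "cjs" },
    { file: "dist/node/es/index_node.js", format: "es" },
  ],
};

export default config;
```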

Performance

HighwayHash is a fast enough hash function that the performance penalty of leaving the land of JS is tangible.

To give an example, the following snippet makes three N-API calls:

function hashData64(data, hash, key) {
  const hasher = hash.create(key);
  hasher.append(data);
  return hasher.finalize64();
}

If we replace it with a single N-API call that initializes, hashes, and finalizes in a single step there can be major performance benefits:

- Hashing a single byte more than doubled in throughput (0.17 MB/s to 0.38 MB/s)

- Even at larger input sizes (~1 MB) there can be a throughput improvement of 10-15%.

So when performance is key, one should design their API to allow users to call methods that reduce the number of N-API calls.

Interestingly, the Wasm side isn’t nearly as affected, but we must still add these new API methods to the Wasm side to adhere to the isomorphic API requirement. The one nice thing is that we can implement the new API entirely in JS when implementing it in Rust brings no benefits.
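One way to satisfy the isomorphic requirement without touching the Rust side is a small adapter that derives the one-shot call from the incremental API. The sketch below uses hypothetical names throughout (IncrementalHasher, IncrementalModule, HashModule, withJsOneShot). On the native side, the same method would instead be a dedicated binding so the whole operation costs a single N-API call.

```typescript
// Hypothetical shapes mirroring create/append/finalize64 from the text
interface IncrementalHasher {
  append(data: Uint8Array): void;
  finalize64(): Uint8Array;
}

interface IncrementalModule {
  create(key: Uint8Array | null | undefined): IncrementalHasher;
}

// The isomorphic surface: every backend must offer the one-shot call
interface HashModule extends IncrementalModule {
  hash64(key: Uint8Array | null | undefined, data: Uint8Array): Uint8Array;
}

// For the Wasm backend, build the one-shot call in plain JS on top of the
// incremental API, since extra Wasm calls are comparatively cheap
function withJsOneShot(base: IncrementalModule): HashModule {
  return {
    create: (key) => base.create(key),
    hash64: (key, data) => {
      const hasher = base.create(key);
      hasher.append(data);
      return hasher.finalize64();
    },
  };
}
```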

Testing

One of the goals I had in developing highwayhasher is to reuse the same test code for both node and browser environments as any feature or bugfix found in one must be equally represented in the other. I’ve noticed that some packages just mock the browser environment in node, but this doesn’t give me confidence. I’m all for simplicity, but I need tests that execute in the browser. Writing one set of tests proved to be quite challenging (I had erroneously assumed that puppeteer would make browser testing trivial) but success was achieved.

The trick is to write jest tests and execute them as usual with the node environment, and then execute the same tests with karmatic, a zero-config browser test environment with a jest-like API (it is really jasmine). Karmatic is truly no-config and since it uses webpack under the covers, it verifies that the browser directive given in the package.json resolves correctly.

Karmatic isn’t perfect:

- A jest-like API means it isn’t jest, so one must write tests using the most simple constructs. Alternatively, drop jest in favor of explicitly relying on jasmine for both node and browser tests

- Karmatic isn’t as well maintained as I’d like to see (no disrespect to the maintainers who have a lot on their plates and have more pressing matters), but ideally there’d be a recent release for changes stuck on master and some development to support more recent dependencies.

So while Karmatic could be better, I still highly recommend it for anyone writing isomorphic libraries, as reusing tests to ensure that the browser part of the library works is invaluable.

Releasing

With the native implementation relying on platform specifics, a release workflow is critical. GitHub Actions gives us one platform to build our Windows, Linux, and Mac libraries. Here is the GitHub workflow:

- For each platform (Windows, Linux, and Mac):

  - Build the project

  - Test the project

  - Upload the platform-specific library as an artifact

- If all platforms pass:

  - Download all artifacts

  - If this is a tagged release, create a GitHub release and upload all artifacts

Since I do not yet feel comfortable uploading straight to NPM, there is a manual step at the end where a local script downloads all the artifacts from the latest release and I publish the package from my personal machine. I understand that others feel differently and will upload to NPM from their Github Actions and that’s fine too.

Release Verification

This is not strictly related to the topic at hand, but I wanted to take a quick aside to explain release verification. Even though highwayhasher is conceptually simple, there are a lot of pieces that are glued together, and it’s possible that one of these pieces falls apart when the package is actually uploaded. For instance, I botched a release of highwayhasher when I uploaded zip files instead of the node libraries.

In an attempt to discover these issues as soon as possible, highwayhasher has a sub-project set up that installs the latest version of itself and reruns the test suite. This sub-project is executed in CI under the usual suspects (Windows, Mac, and Linux). The CI workflow is executed manually after every release (one can’t trigger it after every commit, as API changes will cause the prior release to fail). Setting up this workflow is hopefully low-hanging fruit, as the process is to transform the test suite from:

const { HighwayHash, WasmHighwayHash } = require("..");

to

const { HighwayHash, WasmHighwayHash } = require("highwayhasher");

And then run the tests as usual.
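The transformation can be as simple as a text substitution run before the tests in the sub-project. The post does not say how the rewrite is performed, so the helper below (pointAtPublishedPackage) is a hypothetical sketch. It rewrites require("..") and leaves other relative requires untouched.

```typescript
// Hypothetical helper: point the test suite's require("..") at the published package
function pointAtPublishedPackage(source: string, pkgName: string): string {
  // Matches require("..") or require('..') exactly; require("../foo") is left alone
  const relativeRoot = /require\((["'])\.\.\1\)/g;
  // A replacer function avoids "$" patterns in pkgName being interpreted
  return source.replace(relativeRoot, () => `require(${JSON.stringify(pkgName)})`);
}
```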

It’s not just tests that benefit from release verification – benchmarks too. In my lovely discussion with the maintainer of the C++ HighwayHash bindings, we found that there was a severe performance regression for highwayhasher. The cause was that the linux distributable was built on Ubuntu 18.04 whereas I had done my tests previously on Ubuntu 20.04. Switching the CI machine to Ubuntu 20.04 and cutting a new release fixed the issue. The exact cause remains a mystery. I initially thought it was the Rust version but that turned out false. Whatever the cause, having a benchmark suite handy allows one to quickly reproduce the issue and confirm the solution.

Cross Compilation

There are more end-user systems than the machines GitHub provides. For instance, GitHub does not provide Arm machines. The Rust community has an established tool for cross compiling to other architectures: cross.

Unfortunately, the napi-rs CLI component mentioned earlier is a thin wrapper around cargo, and there appears to be no extension point for substituting in cross (and there appear to be no plans to add one). Other Rust N-API projects appear to achieve cross compilation by registering QEMU and architecture-dependent packages like gcc-aarch64-linux-gnu. That’s fine, but since I wasn’t able to reproduce the workflow on a local VM, I decided to stick with cross, as that is what I know. As always, I reserve the right to be wrong here!

Cross isn’t perfect: I’d like to cross compile to the new hotness, aarch64-apple-darwin, but this is not yet possible. At least I’m able to push out AWS Graviton-compatible assets (aarch64-unknown-linux-gnu), tested to ensure they work, with ease.

Building the node native addons directly (they are, after all, just plain dynamically linked libraries) with cargo / cross meant that I could drop the napi cli dependency. Some additional tweaks were needed:

- Distributing the shared libraries with platform triples in their name

- Copying and renaming to the distribution directory. Linux shared libraries have a .so extension, Windows has .dll, and Macs have .dylib. These are all remapped to have a .node extension

- Updating the runtime detection logic to something like:

import * as os from "os";

// Map Node's platform/arch pair to the Rust target triple embedded in the file name
const getTriple = (): string => {
  const platform = os.platform();
  const arch = os.arch();
  if (platform === "linux" && arch === "x64") {
    return "x86_64-unknown-linux-gnu";
  } else if (platform === "linux" && arch === "arm64") {
    return "aarch64-unknown-linux-gnu";
  } else if (platform === "linux" && arch === "arm") {
    return "armv7-unknown-linux-gnueabihf";
  } else if (platform === "darwin" && arch === "x64") {
    return "x86_64-apple-darwin";
  } else if (platform === "win32" && arch === "x64") {
    return "x86_64-pc-windows-msvc";
  } else {
    throw new Error(`unknown platform-arch: ${platform}-${arch}`);
  }
};

Seems tolerable – if a bit home-grown.
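The copy-and-rename step can be captured in a couple of pure functions. This is a hypothetical sketch (cdylibFileName, distFileName, and renamePlan are illustrative names). It assumes cargo or cross was invoked with --target, so the library lands in target/<triple>/release/. It also relies on the usual cdylib naming: a lib prefix with .so on Linux, a lib prefix with .dylib on macOS, and no prefix with .dll on Windows.

```typescript
// File name cargo gives a cdylib; libName is the crate's library name
// (hyphens in a package name become underscores in it)
function cdylibFileName(libName: string, triple: string): string {
  if (triple.includes("windows")) {
    return `${libName}.dll`; // Windows cdylibs have no "lib" prefix
  }
  if (triple.includes("apple-darwin")) {
    return `lib${libName}.dylib`;
  }
  return `lib${libName}.so`; // Linux and other ELF targets
}

// Name used in the distribution directory and by the runtime detection logic
function distFileName(libName: string, triple: string): string {
  return `${libName}.${triple}.node`;
}

// Pair up where cargo/cross writes the library with its published name
function renamePlan(libName: string, triple: string): { from: string; to: string } {
  return {
    from: `target/${triple}/release/${cdylibFileName(libName, triple)}`,
    to: distFileName(libName, triple),
  };
}
```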

One Über Package

The highwayhasher NPM package includes all platform-specific modules. If you were to inspect the package, you’d see a directory structure like:

.
├── cjs
├── es
├── highwayhasher.aarch64-unknown-linux-gnu.node
├── highwayhasher.armv7-unknown-linux-gnueabihf.node
├── highwayhasher.x86_64-apple-darwin.node
├── highwayhasher.x86_64-pc-windows-msvc.node
└── highwayhasher.x86_64-unknown-linux-gnu.node

I call this an über package as everything is included (in the same vein as über jars).

There are a few other ways to distribute this package. Napi-rs recommends the “distribution of native addons for different platforms via different npm packages”. If I had gone that route, highwayhasher would have optional dependency packages like @highwayhasher/linux-x64. Caveats of that route aside, I prefer to keep things simple and bundled in one package.

A benefit of shipping everything together precompiled is that there are no post-install steps: no compilation tools needed, no need to fetch from external resources. It saves downstream users a lot of pain.

I’m sure there are downsides to this approach. One downside I know of is that the package size that one downloads from NPM is larger (a whopping 1.5 MB, or 500 kB compressed, at the time of this writing). It is important to strip (via the strip command) these native modules on Linux to cut down on file size (it reduces them from 1.2 MB to 300 kB). In the process of setting up the release workflow, I learned that the strip command is platform specific, so each new architecture I add a release for needs to download the appropriate strip.

The extra space required for storing unused libraries should be tolerable. It may be an annoyance for Electron users who see that their executable is now 500 kB bigger than it should be, but then again Electron isn’t going to win any awards for disk space efficiency.

Speaking of electron, that remains untested. It may just work – but it looks like the node_bindgen folks have instructions for their users, and I’m not sure what that means for me.

And I know that not having all architectures supported on day 1 is not great, but it is also not critical. When we bundle everything together, we can seamlessly transition Arm Macs to the x64 library and still reap performance benefits thanks to Rosetta 2 (I have no idea if this works, as I’m not sure what an x64 build of Node.js reports via the os module on an Arm Mac, but the point remains that one can provide fallbacks). The worst-case scenario would be to fall back to the Wasm implementation if no suitable library could be found. Suddenly the async annotation on the otherwise synchronous native initialization looks enticing, since compiling Wasm may now be required on unsupported native platforms.
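The fallback idea can be sketched generically. loadWithFallback is a hypothetical helper, and its two callbacks stand in for requiring the .node file and compiling the Wasm. The combined loader has to be async precisely because the fallback path compiles Wasm.

```typescript
// Try the synchronous native loader first; if it throws (e.g. no prebuilt
// library for this platform-arch), fall back to the async Wasm loader
async function loadWithFallback<T>(
  loadNative: () => T,
  loadWasm: () => Promise<T>,
): Promise<T> {
  try {
    return loadNative(); // e.g. require() of the platform-specific .node file
  } catch {
    return loadWasm(); // e.g. compile the inlined Wasm
  }
}
```

Errors from the Wasm path still surface as a rejected promise, so a platform with neither a native library nor Wasm support fails loudly rather than silently.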

Conclusion

This was quite the tour! Lots of topics covered:

- Why node native modules continue to be useful in the face of Wasm

- Writing a node native module via rust N-API bindings

- The performance cost of N-API calls

- The ergonomic cost of supporting isomorphic Wasm implementations

- How to create a package that delegates to different implementations depending on node vs web

- How to test our package in node and browser with the same test suite

- Uploading everything into a single package

One of the reasons I’m writing this post is that I may lack additional insights, so if you have any, please reach out. See discussion on Reddit

Comments


This is an impressive walkthrough and library you have wrote. One question I had: did you use wasm to generate typescript bindings for napi-rs? I was trying to find best way to do it and only stumbled over bug: https://github.com/neon-bindings/neon/issues/349. Using your code examples:

would not work for me and typescript complains about lack of definition. Context: I am trying to add napi-rs/neon to obsidian-plugin (https://github.com/obsidianmd/obsidian-sample-plugin)

What would be your recommendation?

I find your library super impressive: https://github.com/nickbabcock/highwayhasher

perhaps you can turn it into a template for cargo generate? It’s pretty much the only working example of the isomorphic package with Rust as the backend for both wasm and napi.

Hey Alex, yeah the lack of typescript definition generated by N-API solutions can be cumbersome to work around, as the require statement returns an any type. It shouldn’t generate an error though. Maybe you can take a look at the tsconfig.json for inspiration? And hopefully the highwayhasher library is small enough to serve as a template itself.